Where is social scientific knowledge: The .pdf or the .csv?

The academic journal article as media

In a recent working paper, Limor Peer, Lilla Orr and Alexander Coppock discuss the practice of “Active Maintenance” to keep reproducibility archives in good working condition.

Today, scientists post their statistical reproduction materials online and they can be initially downloaded and re-run by a standard practitioner in their field. Peer, Orr and Coppock point out that as time passes, it becomes less likely that this will still work: software changes quickly (especially open-source software built on multiple dependencies like R) and digital bits themselves decay over time.

As someone who is obsessed with social scientific knowledge decay caused by technological change, I unsurprisingly see parallels to my work on Temporal Validity.

Peer, Orr & Coppock: The technology of social scientific knowledge production changes, making the technical synthesis of social scientific knowledge produced under different technological regimes difficult.

Me: The technology of human society changes, making the theoretical synthesis of social scientific knowledge produced under different technological regimes difficult.

More generally, I’m excited to see more work that takes seriously the project of knowledge synthesis under causal empiricism. Meta-Science is Political Methodology, and this is a great example of what that looks like: statistical/empirical work about the aggregate output of social scientific knowledge, in opposition to standard political methodology that focuses on the quality of individual papers.

One criticism of this draft of the paper. I’m a fan of third-party replication for all the reasons they mention. It forces scientists to get their act together when finalizing the paper, and it allows the community to scrutinize scientific claims by examining the data and statistical procedures before they have been transformed into a “written description” to match the received form of social scientific knowledge: the academic paper.

But they don’t fully articulate the reason we want to ensure reproducibility of a given result indefinitely. There are various techniques (containerization, emulation) that will allow “current software to run on future computers,” but this becomes reproduction for reproduction’s sake. Long-term reproducibility methods that do not allow for integration with future tech are not, in my view, importantly different from a video recording of today’s computers running a given chunk of code.

The authors clearly don’t want a video; they argue that “Researchers may want to integrate legacy data with current and future computation methods in order to reuse, reassess, and reproduce results.” I think that’s right, and would add that researchers will certainly want access to the original data for purposes of meta-analysis and data fusion. But this is an argument for preserving the data, not the capacity to re-run a given iteration of Random Forest on it.

Thinking along these lines: Where is social scientific knowledge located?

Social science is in the middle of a paradigmatic shift that forces us to re-evaluate once-settled practices. No longer is the brain of the scientist the site of knowledge synthesis. The goal of data fusion is for a non-human intelligence to serve as the site of knowledge synthesis. Causal revolutionaries have kicked this can down the road, but we need to start re-orienting social scientific practice to reflect this ultimate goal. For now, of course, I’m still using the knowledge I’ve shoved into my own brain.

tl;dr:

The social world is impossibly high-dimensional. Knowledge is a means of reducing that dimensionality. Language-based theory is the most efficient way to encode information for human knowledge synthesizers. The goal for machine knowledge synthesizers is to learn from data. Academics should store knowledge in the appropriate format for the ultimate knowledge synthesizer.

The goal of social science is to understand why people do things. To measure progress in this goal requires an objective standard: predicting what people will do. If we know why people do things and we observe their conditions, we can predict what they will do.

What follows is a convenient history of social scientific knowledge practices. I’m trying to keep this as short as possible while still causing the desired knowledge to exist in your brain; making it longer might improve the latter at the cost of the former, but that’s begging the question.

Human Knowledge: Language

Initially, humans observed other humans and came up with verbal theories about why they behaved as they did. The sensor, processor, transmitter and receiver of this knowledge were all human brains. Oral culture means transmitting knowledge in the form of language. This is more efficient than each human observing other human behavior directly and trying to detect patterns from their own memories, within their own brains.

The goal of this transmission is to take knowledge in brain A and cause the knowledge to exist in brain B. It is impossibly inefficient for humans to do this by describing exactly all of the sensory information they received. Our brains are already detecting patterns and developing theories in the process of taking in information; although we receive huge amounts of sensory stimuli every second, we have to immediately process it into mental categories to avoid being overwhelmed. Brain A uses language-based theories to more efficiently cause the knowledge to exist in brain B.

An example. Over the course of X’s life, she sees many couples meet and get married to satisfy their respective families. She sees many couples meet and fall madly in love. There is some overlap between these couples, but not much. She concludes that “<3”: the human tendency to “fall in love” is distinct from the goal of achieving social cohesion.

X might think about all of these instances, but if she wants the knowledge “<3” to exist in brain Y, it’s extremely inefficient to enumerate them. Instead, in the language of human thought (which is, helpfully, “language”), she might develop a theory:

“<3” == “The heart wants what it wants”

The utterance “the heart wants what it wants” is not in fact identical to the knowledge “<3”, but speech is the best technology we had. There are better and worse theories, however, according to two criteria of efficiency:

Efficiency of storage (number of words, difficulty of remembering them)

Efficiency of transmission (the similarity between the knowledge in brain X and the knowledge produced in brain Y)

As I mentioned above, these criteria are often in conflict. An aphorism like “the heart wants what it wants” is very easy to store, but its effect on brain Y is more variable and less likely to reproduce the knowledge held in brain X. A fable that tells a narrative of forbidden love is harder to store, but has sufficient scaffolding to make the effect on brain Y more consistent.

The only qualitative distinction between this oral culture and academic social science until the second half of the 20th century is the invention of writing. Writing is simply a more durable form of knowledge transmission, allowing for language (and thus theoretical knowledge) to accumulate outside a given human brain. For any of that knowledge to act in the world by improving the accuracy of a prediction, however, it still has to make its way into a human brain; it has to be read.

Quantitative social science uses a specific kind of language: numbers instead of words. This language is useful for storing and transmitting certain kinds of knowledge. But it is rarely accessed directly by human eyes; as you know, it is primarily useful when it is transformed by a machine into different numbers, which are then translated into words.

The form of the social scientific manuscript is designed to contain both knowledge and information about its production. This is an admittedly fuzzy distinction; the latter is in fact sociological or pedagogical knowledge, but the focal knowledge in the paper relates to a theory of human behavior. In quantitative social science manuscripts, the focal knowledge is always a theory expressed in words and is thus designed to be put into a human brain. The numbers that are used in the production of the manuscript and which comprise the manuscript are necessary scaffolding that allows the manuscript to produce the desired knowledge in the brain of the reader.

A data scientist for a dating company might write a blog post that analyzes billions of numbers, produces images representing those numbers, and concludes that people who fall in love after a first date have lower average mutual attraction scores than people who don’t fall in love after a first date. The reader can’t access the numbers directly, but they are necessary to produce “<3” in the brain of the reader.

Non-Human Knowledge

Human social scientific knowledge exists in two types of places: the brains of humans and the manuscripts we produce. The current challenge, as readers of this blog know, is how to synthesize the knowledge we are accumulating. All of the practices of social science, and in particular the language we use to encode knowledge outside of human brains, still assume that the human brain will be the ultimate point of knowledge synthesis.

The focal knowledge of this blog post is that the ascendant paradigm in quantitative social science—the causal revolution/non-parametric statistics/post-systems empiricism/data fusion/external validity paradigm—assumes the opposite: that knowledge will ultimately be synthesized outside of the brain, by a machine.

If this is the goal, the academic manuscript is an outdated form for storing focal social scientific knowledge. We are no longer aiming to discover the correct language that will cause a human brain to store knowledge to predict human behavior; we are aiming to discover the correct numbers that will cause a machine to store knowledge to predict human behavior. This is already the case with meta-analysis, and fields (like medicine) where meta-analyses are more established are already more likely to emphasize the commensurability of the numbers produced as part of different constituent studies.

Verbal language and numbers can be easily transformed back and forth, of course. But they perform differently on the two efficiency criteria depending on the recipient of the focal knowledge. Numbers are less efficient at transmitting knowledge to humans; language is less efficient at transmitting knowledge to machines.

It is helpful to re-tell the narrative about the development of social scientific knowledge practices from a machine perspective. Machines are powerful but fragile; unlike human brains, they don’t worry about their own survival. The goal of machine learning is simply to detect patterns in numbers.

Machines are capable of taking in massive amounts of sensory information without being overwhelmed, and they can store it and access it without needing to pre-process it with perceptual categories. They can also easily aggregate immediate sensory information gathered from a much wider range of locations, both geographic and temporal.

A second-by-second GPS tracker on every human would provide an impossibly large and wide time series, but still one that a very powerful machine could analyze and use for prediction. Merge the location data with biographical and demographic data, browsing history, and credit scores, and the machine gets even better at prediction. One of the things it could predict is when human body K goes from spending 8 of every 24 hours motionless far away from any other body to spending 8 of every 24 hours motionless next to body L. One of the parameters with positive weight in this prediction is that the distance between the data vector K and the data vector L is in the 60-75th percentile range of all such distances between vectors representing geographically close bodies.

In other words, the machine has synthesized the data and generated the knowledge “<3”.
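The percentile feature in that prediction can be made concrete with a toy sketch. Everything in it is an illustrative assumption rather than a description of any real system: the function names, the choice of Euclidean distance, and the simple percentile-rank rule are all hypothetical. It also ignores the "geographically close" filter and just compares against every pair it is given. All it does is check whether the distance between two data vectors lands in the 60th to 75th percentile band of all pairwise distances.

```python
import math


def euclidean(u, v):
    """Straight-line distance between two equal-length numeric vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def percentile_rank(value, population):
    """Percentage of values in `population` that are <= `value`."""
    return 100.0 * sum(1 for x in population if x <= value) / len(population)


def in_band(k, l, vectors, low=60.0, high=75.0):
    """True if the k-l distance sits between the `low` and `high`
    percentiles of all pairwise distances in `vectors`.

    `vectors` maps a (hypothetical) person id to that person's data vector.
    """
    ids = sorted(vectors)
    # Distance for every unordered pair of people.
    all_dists = [
        euclidean(vectors[a], vectors[b])
        for i, a in enumerate(ids)
        for b in ids[i + 1:]
    ]
    rank = percentile_rank(euclidean(vectors[k], vectors[l]), all_dists)
    return low <= rank <= high
```

In a real model this would be one binary feature among many, and the weight on it would be learned from data rather than asserted.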

What…?

This is all premised on the assumption that the ultimate synthesis will happen outside of a human brain. This idea is admittedly shocking, but again: this is the ascendant paradigm in quantitative social science, even if the practitioners I talk to rarely think in these terms.

The highest-profile and -level methodological discussion related to this is the recent discourse about RCTs. Central to this debate is a paper by Angus Deaton and Nancy Cartwright (and the associated rebuttals; Judea Pearl’s is most useful for my argument). Deaton and Cartwright hold to the interpretivist line that social science necessarily involves understanding “why things work,” that RCTs are useful but only as a part of a cumulative program that includes a variety of methods, and that the ultimate knowledge synthesizer will be a human brain reading academic manuscripts.

Pearl’s cited “Data Fusion” is analogous to the formalized treatments of “external validity” that I’ve discussed before, although even more abstract. The idea is that humans run a large number of RCTs that then produce both numbers and academic papers. After about another decade of this research programme, we will give the numbers to a machine. The machine will then tell us some other numbers; a prediction about what will happen if we run another RCT. If this prediction is accurate (more accurate than a prediction by a human knowledge synthesizer), the research programme will be a success.

Honestly: I’m on the fence. This is a fascinating time to be a social scientist; the epistemic ground is shifting and our tools are becoming dramatically more powerful. So I’ll wait and see.

However, given the premise of Data Fusion and the non-human knowledge synthesizer, we should radically reform social scientific practices and institutions. I’ve discussed the reform of the structure of the academic “firm” and have co-founded a journal focused on quantitative description, and discussed how the proper role of meta-scientific methodology is to improve aggregate knowledge production, not improve the knowledge contained within a given academic paper.

This ponderous post extends that final point: the “received form” of social scientific knowledge, the journal article, is itself incompatible with the present paradigm.

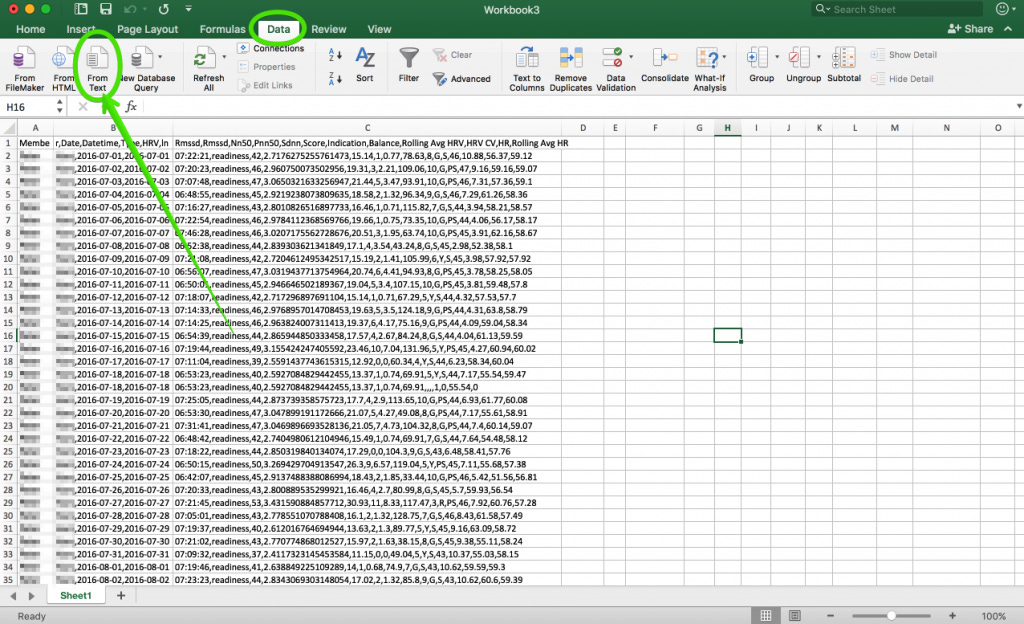

And this is why I don’t think Peer, Orr and Coppock go far enough. The focus on extending the reproducibility of a single journal article serves to reify the journal article as a form. Given the goal of a non-human knowledge synthesizer, this is a mistake. Instead, we need to emphasize the legibility of our knowledge production to the machine. They are correct that preserving knowledge is essential, but we can do so much more efficiently by eschewing the non-focal knowledge encoded in human language in our academic papers that takes up so much of our time. The focal knowledge, in this paradigm, is not located in the pdf but instead in the csv (in terms of raw data and neural network weights).
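As a rough illustration of what "legible to the machine" could mean in practice, here is a minimal sketch that stores study-level results as rows in a csv and reads them back with their numeric types intact. The column names (`study_id`, `outcome`, `estimate`, `std_error`, `n`) and both function names are hypothetical choices of mine, not an existing standard or anything proposed by Peer, Orr and Coppock.

```python
import csv
import io

# Hypothetical schema for one row per study-level estimate.
FIELDS = ["study_id", "outcome", "estimate", "std_error", "n"]


def write_estimates(rows):
    """Serialize a list of row dicts to csv text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def read_estimates(text):
    """Parse csv text back into row dicts, restoring numeric fields."""
    rows = []
    for row in csv.DictReader(io.StringIO(text)):
        rows.append({
            "study_id": row["study_id"],
            "outcome": row["outcome"],
            "estimate": float(row["estimate"]),
            "std_error": float(row["std_error"]),
            "n": int(row["n"]),
        })
    return rows
```

The point of the sketch is only that a shared schema makes results from different studies directly stackable; which columns such a schema would actually need is exactly the open question.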

This plan, of course, causes a host of sociological problems: human brains have to evaluate the knowledge produced for purposes of careers etc; we would have to rebuild pedagogy from the ground up, with limited capacity to transfer fresh knowledge from machines to untrained student minds; what I’m describing sounds like an insane millenarian cult that the public might rightly seek to destroy.

My hope is to prompt more discussion along these lines with social scientists who are more fully invested in the new paradigm than I; I believe that I’m describing an important problem, and while I don’t have the answer, we will need an answer for this programme to succeed.

I have a lot of questions

I started collecting caveats to the main narrative down here but there were like a million of them. I know that the damn thing is incomplete; I just wanted to keep it short and it’s still too long!

1 Isn’t this just meta-analysis?

A more moderate approach would indeed be to expand (in both scale and sophistication) the current use of meta-analysis. This is what many practitioners who haven’t embraced Pearl’s Data Fusion seem to be developing towards. This might work for some questions, but I’m still concerned that Nancy Cartwright is right: there’s always too much work being done by the word “similar.”

That is: each study/experiment/paper that is the unit of analysis of a meta-analysis is unique. There are uncountably many tiny ways in which they differ from each other. The only reason they can be lumped together is that the researcher has decided they are “similar” enough. At present, for field experiments, the variance in the results of “similar” studies is so large that meta-analysis of even the most-studied questions is barely better than random at predicting the sign of the effect of a given intervention.
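One way to make the "predicting the sign" test concrete is a leave-one-out check: pool all studies but one, and see whether the pooled estimate has the same sign as the held-out study. The sketch below is my own illustration under simplifying assumptions, not the procedure used in any particular meta-analysis. It uses a standard fixed-effect (inverse-variance weighted) pooled estimate, and the function names are hypothetical.

```python
def pooled_effect(effects, std_errors):
    """Fixed-effect pooled estimate: each study is weighted by 1 / se^2."""
    weights = [1.0 / se ** 2 for se in std_errors]
    return sum(w * y for w, y in zip(weights, effects)) / sum(weights)


def loo_sign_accuracy(effects, std_errors):
    """Share of studies whose sign matches the pooled estimate
    computed from all the *other* studies (leave-one-out)."""
    hits = 0
    for i in range(len(effects)):
        rest_effects = effects[:i] + effects[i + 1:]
        rest_errors = std_errors[:i] + std_errors[i + 1:]
        pooled = pooled_effect(rest_effects, rest_errors)
        # Count a hit when the pooled sign agrees with the held-out study.
        if (pooled > 0) == (effects[i] > 0):
            hits += 1
    return hits / len(effects)
```

A value near 0.5 on real studies would be the "barely better than random" case; a value near 1.0 would mean the "similar" studies really are similar in the sense that matters for prediction.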

Practitioners are aware of this problem, of course, and there are promising developments towards convergence and standardization. The humanist in me is rooting for this approach to succeed; I’m just not sure we can pull it off.

🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖 🤖