Discover more from Never Met a Science

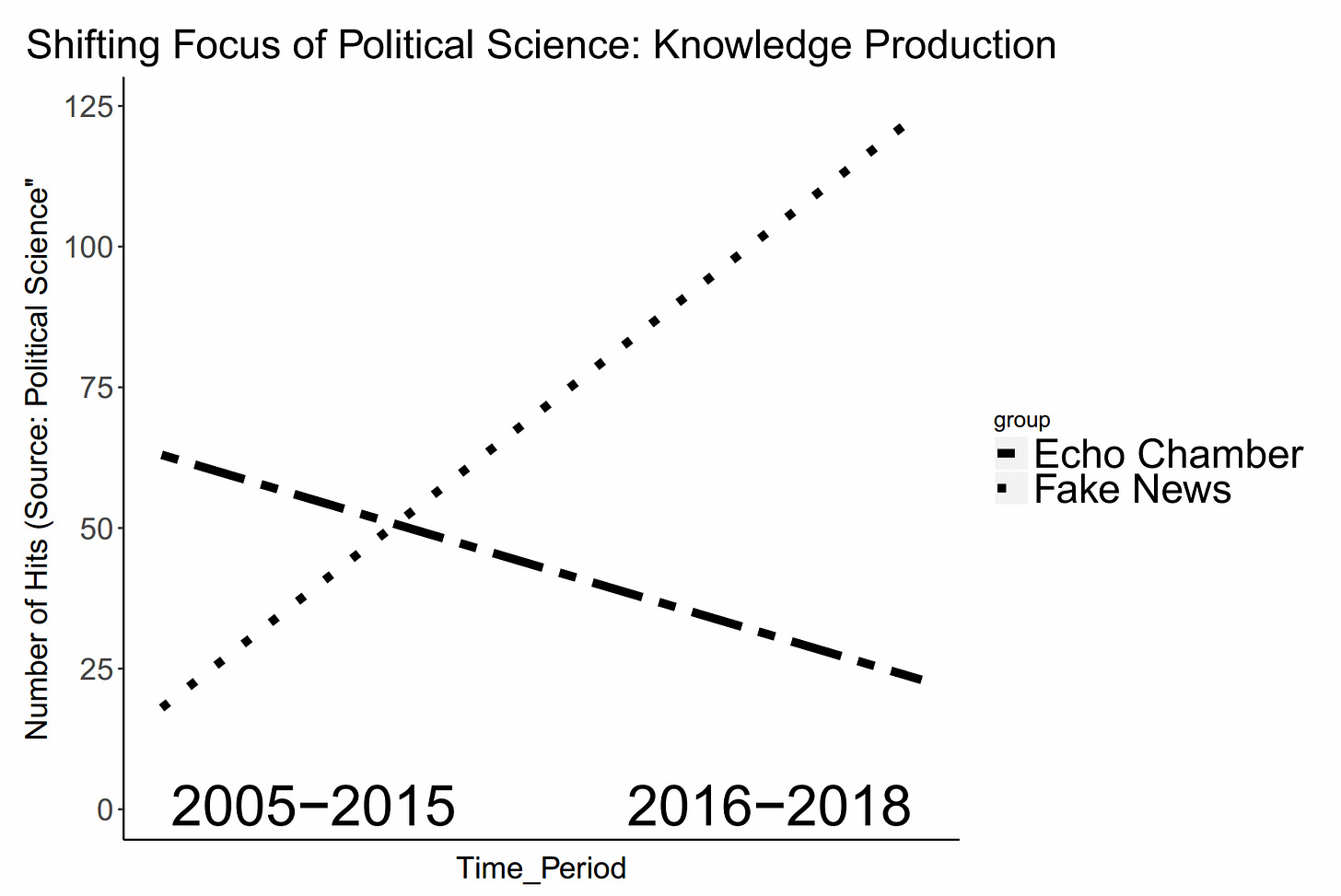

We are in the midst of the next great topical shift in the study of digital media: the Capitol insurrection was a massive shock to our sense of normalcy, and it seems undeniably true that digital media was a necessary (if not sufficient) condition for it. So my guess is that we’ll see the peak of “Fake News” research in a few years when the backlog of papers gets published, but that we’re about to see a takeoff of “right-wing extremism” and “digital radicalization” research.

My first question: how is social science supposed to work — at all — under these conditions? Social science is a cumulative practice; no single paper can explain this messy, complex world, but the premise is that we can synthesize the knowledge from a host of papers over time.

I’ve argued that at present social science is trying to study too many things at once; with the current aggregate supply of social scientist-hours, we need to dramatically limit the scope of our inquiry to make progress on anything.

But if we’re just going to switch topics every four years (or more quickly, if things continue to accelerate)…the entire enterprise is farcical. The farce is compounded by the glacial slowness and inherent conservatism of academic publishing, as well as the trend-chasing incentives created by career advancement determined by media coverage and grants won.

My work on temporal validity takes up the call by Hofman, Sharma and Watts to define the theoretical limits of knowledge of complex systems. I believe (but have not yet shown rigorously) that many of the kinds of causal questions we think we can answer are in fact beyond this limit, and that we can produce more knowledge with a more epistemically humble approach. Still, I share the desire for this kind of knowledge and would applaud real effort to produce it.

However, at present, I can’t escape the feeling that we’re not even trying.

Trying would mean:

1. Formalizing and making coherent the questions we ask, instead of bumbling around in a world of incoherency.
2. Making falsifiable predictions about the future to vet the knowledge we claim to create.
3. Adapting the institutions of knowledge production to reflect the contemporary world.

The last point is the topic of this post, and specifically the institution of the academic paper as the focal unit of knowledge production. There are many limitations, but most relevant to the topic of digital media is time.

In the field of political communication, there are thousands of “lab” experiments (whether in person or on the internet) in which subjects are presented with one or more media stimuli and respond somehow, either by choosing which media to consume or by answering survey questions. I’ve done many of these experiments myself. They tend to ask what kinds of media have what kinds of effects on what kinds of people, holding the duration of the encounter constant.

Recent innovations in media effects research have made these experiments far more realistic, by letting subjects select which media to consume and by making the experience more natural. However, the time spent with media remains stubbornly fixed at the 15 or so minutes of a typical media effects experiment.

There are a great many experiments finding some sweet, sweet ***s on the differences between the effects of different types of (15 minutes of) media on different types of people. In terms of magnitude, however, it’s very rare to see one type of person or one type of media show an effect more than twice as large as another, and smaller differences are the norm. Moreover, in light of advances in research design (power analyses, pre-analysis plans, registered reports), the true range of effect sizes is likely even smaller than the published literature suggests.
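Why published effect sizes overstate the true range can be illustrated with a small simulation of the “significance filter” (what Gelman and Carlin call type M, or magnitude, error). This is a minimal sketch under assumed numbers, not an analysis of any real literature: every hypothetical study shares one true effect of 0.1 standard deviations, has 200 subjects per arm with unit-variance outcomes, and counts as “published” only if |z| > 1.96. The function name `published_estimates` and all parameter values are illustrative.

```python
import numpy as np

def published_estimates(true_effect=0.1, n_per_arm=200, n_studies=10_000, seed=0):
    """Simulate many two-arm experiments that all share ONE true effect and
    return only the estimates that clear a p < .05 filter (|z| > 1.96)."""
    rng = np.random.default_rng(seed)
    # Standard error of a difference in means when outcomes have unit variance.
    se = np.sqrt(2.0 / n_per_arm)
    # Each study's estimate is the true effect plus sampling noise.
    estimates = rng.normal(loc=true_effect, scale=se, size=n_studies)
    significant = np.abs(estimates / se) > 1.96
    return estimates[significant]

true_effect = 0.1
published = published_estimates(true_effect=true_effect)
# Exaggeration ratio: average published magnitude relative to the true effect.
exaggeration = np.mean(np.abs(published)) / true_effect
# The true effects have zero spread by construction; the published ones do not.
spread = np.std(published)
print(f"{published.size} of 10000 studies significant; "
      f"exaggeration ~ {exaggeration:.1f}x; spread of published estimates ~ {spread:.2f}")
```

In this setup every surviving estimate must exceed 1.96 standard errors (0.196) in magnitude, so the average published effect is necessarily at least 1.96 times the true one, and the published estimates disagree with one another even though the underlying effects are identical.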

The most succinct summary of where the evidence points is the title of Alex Coppock’s dissertation: “Positive, Small, Homogeneous, and Durable.” In other words: a single unit of media changes everyone’s mind a little bit, in the direction of the message, and the change lasts.

I’m inclined to agree. “Lab”-style media effects experiments made sense when Carl Hovland refined them into their modern form to study propaganda films shown to US troops in WWII. Writing in the 1940s, Hovland thought remarkably deeply about the problems that plague media effects studies today. However, thanks to a combination of his power over research subjects (because Army) and the fact that so little film media could possibly be consumed, his research was temporally coextensive with the real media consumption context: he made people watch a whole film to see the effect of that film. The explosion in the supply of media, and in the contexts for consuming it, has never compromised the internal validity of any given media effects study, but it has rendered these studies temporally non-representative of ecological media consumption.

So why is this the dominant research design for understanding media? Causality is hard! Media effects research that effectively exploits natural experiments (from the channel ordering of Fox News to the semi-random rollout of Fox News to the semi-random rollout of 3G) is great and has been influential, but the world is stingy with its as-if randomizations.

Indeed: causality is too hard. The causal revolution has, in practice, created a hierarchy where any local, ephemeral causal knowledge is emphasized and valorized more than general, extensive, time-series non-causal knowledge.

The Causal (Post-Systems) Empiricists are at the same point that the Rational Choice Theorists were fifty years ago. To paraphrase Green and Shapiro (1994): “We’ve discovered the true technology of social knowledge production; of course we haven’t solved everything yet, but let us run the institutions for thirty years and we’ll be on the moon.”

In general, I’m on board. Field experiments, in particular, can be an incredible site of knowledge generation, and they are being scaled up considerably. God bless 🙏

For media effects, though, we have the causal model pretty much sorted. People are what they do; media consumption changes them, generally according to Coppock’s formulation above: each media effect is of roughly equal magnitude for everyone. If so, differences in how much people are changed come mostly from differences in how much media, and which media, they consume. Our task is thus to measure how people spend their time: what media they consume, and how much of it.
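Under the homogeneous-effects model described above, the only thing that differs across people is exposure. A minimal sketch, assuming a linear dose-response with no decay (loosely, Coppock’s “durable”) and a hypothetical per-hour effect `EFFECT_PER_HOUR` that is an illustrative number, not an estimate from any study; the names `Viewer` and `cumulative_effect` are likewise made up for this example.

```python
from dataclasses import dataclass

# HYPOTHETICAL per-hour effect (in outcome standard deviations), assumed identical
# for everyone; an illustrative number, not an estimate from any study.
EFFECT_PER_HOUR = 0.001

@dataclass
class Viewer:
    name: str
    hours_per_week: float

def cumulative_effect(viewer: Viewer, weeks: int, effect_per_hour: float = EFFECT_PER_HOUR) -> float:
    """Total shift under an assumed linear dose-response with no decay."""
    return effect_per_hour * viewer.hours_per_week * weeks

light = Viewer("light viewer", hours_per_week=2)
heavy = Viewer("heavy viewer", hours_per_week=30)
for v in (light, heavy):
    print(v.name, round(cumulative_effect(v, weeks=52), 3))
```

With the per-unit effect held fixed, a 15-fold difference in weekly hours produces a 15-fold difference in cumulative effect, which is why, under this model, measuring time use becomes the main empirical task.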

Media (both digital and broadcast) undeniably played a decisive role in the events of January 6. I believe that a quantitative social science of media that emphasized description would have been far more useful than the current apparatus for generating the kind of knowledge that could have helped anticipate this insurrection.

So why was this year so tumultuous? Here are a few descriptive graphs I’ve been preparing for my book and grabbing from the internet.

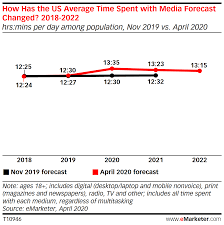

Hours of television consumed per week

Pandemic Media Consumption

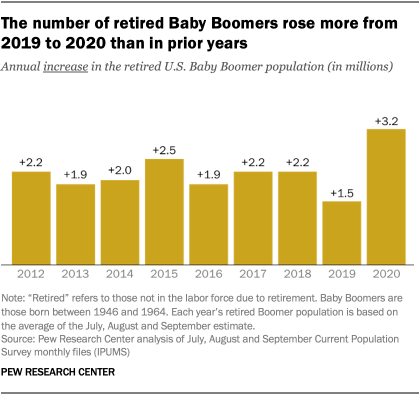

Pandemic Retirements

Cable News Viewership

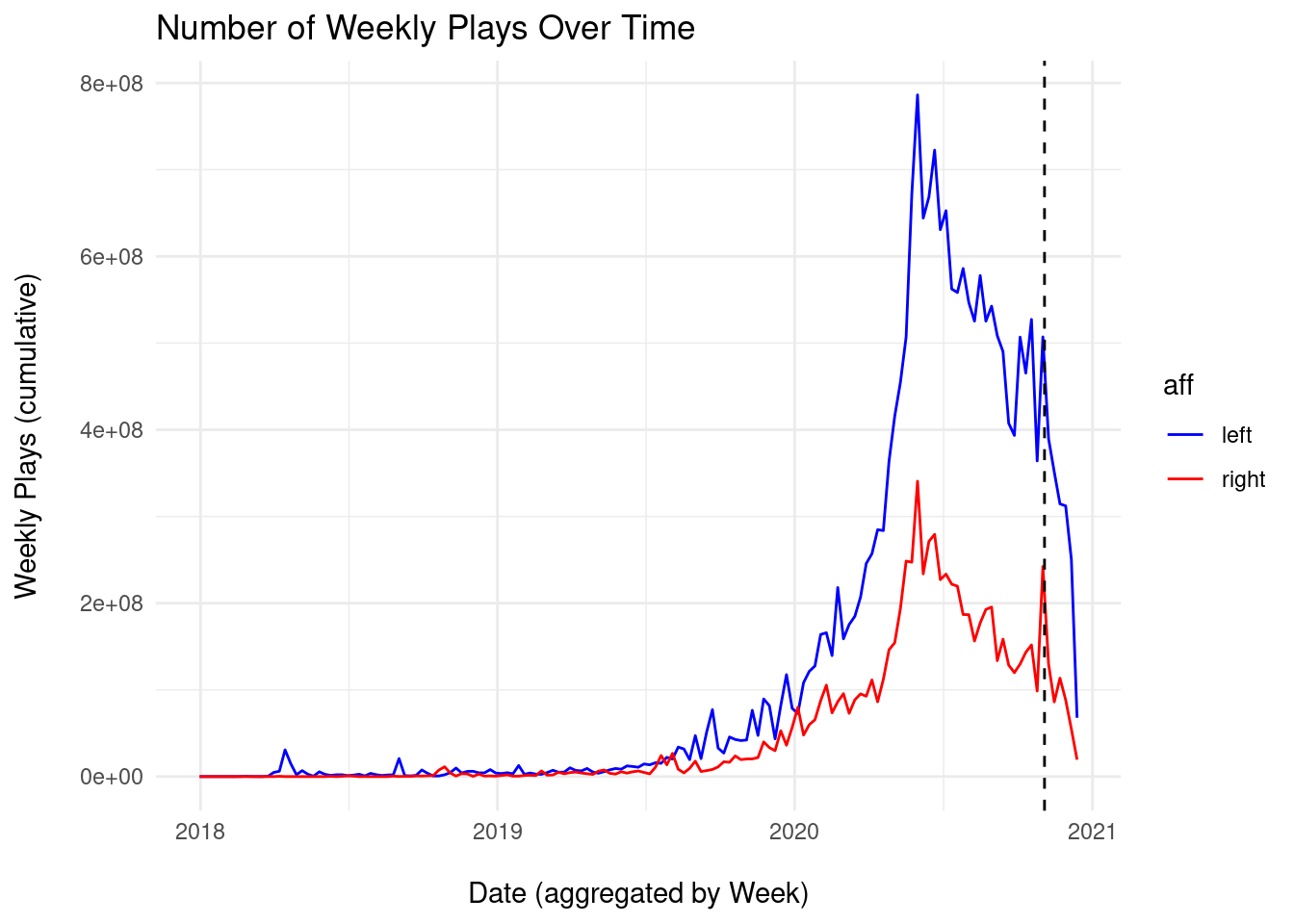

Number of Political TikTok Views

Overall: the pandemic and pre-existing trends meant that people consumed an unbelievable amount of media this year. Audience measurement for legacy media is still going strong, but we have not yet developed a comprehensive approach (and the accompanying institutions) for audience measurement of digital platforms. The final graph, of political TikTok views, comes from my own research with Fabio Votta and Benjamin Guinaudeau. I want the same kind of measurement for every aspect of digital media, especially the major platforms.

We were treated to a year of Kevin Roose tweeting the top engaged-with link posts on Facebook; why not do this for the top THOUSAND links, and look at the aggregate trends in viewership? How did the average number of likes, comments and RTs on Trump’s tweets vary this year? How many mentions of “January 6” were there on Parler in relation to other dates?

This is the point of the Journal of Quantitative Description: Digital Media. These are legitimate and in fact necessary questions for a contemporary social science of digital politics. In the long run, I aspire to the creation of descriptive datasets and real-time visualizations that are not constrained by the “paper” as the unit of social science knowledge. But for now, given the centrality of the “paper” to the sociology of academia, the JQD: DM offers a modest step towards legitimizing these research questions.
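The Facebook question raised above (the top THOUSAND links rather than the top ten, tracked over time) is a simple aggregation once the data exist. A minimal sketch, assuming posts arrive as hypothetical `(post_date, link_url, engagement)` tuples from whatever source is available; the function name and data shape are illustrative, and no particular platform API is implied.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

def monthly_top_n_engagement(posts, n=1000):
    """Mean engagement of each month's top-n link posts.

    posts: iterable of (post_date, link_url, engagement) tuples (a hypothetical
    shape; adapt to whatever the real data source provides).
    Returns {(year, month): mean engagement of that month's n most-engaged posts}.
    """
    by_month = defaultdict(list)
    for post_date, _link_url, engagement in posts:
        by_month[(post_date.year, post_date.month)].append(engagement)
    # Keep only the n largest values per month, then average them.
    return {
        month: mean(sorted(values, reverse=True)[:n])
        for month, values in sorted(by_month.items())
    }

# Made-up rows purely to show the call; these are not real engagement figures.
toy_posts = [
    (date(2020, 1, 5), "https://example.com/a", 10),
    (date(2020, 1, 9), "https://example.com/b", 30),
    (date(2020, 1, 20), "https://example.com/c", 20),
    (date(2020, 2, 1), "https://example.com/d", 5),
]
print(monthly_top_n_engagement(toy_posts, n=2))
```

The same pattern extends to the other questions in that paragraph, for example averaging likes, replies, and retweets per tweet by week, or counting mentions of a date per day.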

WE GET TO DECIDE WHAT SOCIAL SCIENCE IS

I hope that quantitative scholars of digital media decide to make our scientific practices better align with the nature of our subject and the exigencies of our political situation.

I do think that some media effect heterogeneity exists. An article written in Korean will have a different effect on me than on South Korean rap god Psy. I believe that “digital literacy” is like language literacy in this respect, so that the effect of digital media varies with subjects’ digital literacy. But this remains an open question. Furthermore, the world of digital media is constantly evolving, and I think it is eminently possible that a new development might have outsized effects, hence my research on deepfakes.

I spend *so much* of my time trying to track down descriptive trends in media consumption by different types of people on different platforms over time. Other than the blessed angels at the Pew Research Center and whatever “Statista” is, there is frustratingly little of this information.


Political Communication, Social Science Methodology, and how the internet intersects each