
If we're taking Causal Empiricism seriously (and if not, I think we need an alternative positivist framework for deploying the knowledge currently being produced by quantitative political science), the most efficient marginal allocation of our resources is to generate quantitative descriptive knowledge. There are three reasons for this:

Transportability requires rich covariate adjustment.

Causal knowledge is currently too expensive.

(The third issue: we don’t know what causal questions to ask. I’ll have a whole post on this one next week.)

I've attempted to discuss this problem in the context of "temporal validity" when the knowledge being generated exists in a rapidly changing environment like (I argue) digital communication. I now think that's a separate and less general point (though still a very important one).

The primary causes of our current state of insufficient quantitative description are a reliance on other knowledge actors and the increasing complexity of the world. The institutions of academic social science were developed alongside other institutions (the media and the government) to which we offloaded some of the key components of the knowledge production process. The stagnation or relative decline in capacity of those actors means that we need to adjust. Also, the social world has become dramatically more complex during the lifespan of many living social scientists. We can no longer take our knowledge of what is for granted from our experience of the world. In both cases, we need more quantitative description.

Transportability requires rich covariate adjustment.

Taking the causal empiricist framework as given, the main finding from the recent string of studies on exportability/transportability/external validity is that variance is dominant. The only way to decrease this variance is through richer covariate adjustment. The minimum possible variance is in fact larger than standard estimates account for (anyone with a prediction: are we taking an L on this year or are we just putting an asterisk in the time series for 2020 and saying it doesn't count?), but even if I'm wrong, at the current margin, the best way to increase our capacity to predict a given causal effect is not to create more causal knowledge but rather to increase the dimensions along which we can adjust the causal knowledge we already have.
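To make "adjusting the causal knowledge we already have" concrete, here is a minimal sketch of one common approach, post-stratification: estimate the effect within each covariate stratum of the study, then reweight those estimates by the stratum shares of the target context. Everything in it is hypothetical and for illustration only: the toy data, the stratum labels (a two-level stand-in for something like digital literacy), and the function names. It is one simple way to do this kind of adjustment, not the method used in any of the studies discussed here.

```python
from collections import Counter, defaultdict

def stratum_effects(records):
    """Treated-minus-control difference in mean outcome within each stratum.

    records: iterable of (stratum, treated, outcome), with treated in {0, 1}.
    """
    totals = defaultdict(lambda: {0: [0.0, 0], 1: [0.0, 0]})
    for stratum, treated, outcome in records:
        cell = totals[stratum][treated]
        cell[0] += outcome  # running sum of outcomes in this arm
        cell[1] += 1        # number of units in this arm
    return {
        stratum: arms[1][0] / arms[1][1] - arms[0][0] / arms[0][1]
        for stratum, arms in totals.items()
    }

def stratum_shares(records):
    """Share of the study sample that falls in each stratum."""
    counts = Counter(stratum for stratum, _, _ in records)
    n = sum(counts.values())
    return {stratum: count / n for stratum, count in counts.items()}

def weighted_effect(effects, shares):
    """Average the stratum-level effects using the given stratum shares."""
    return sum(effects[stratum] * share for stratum, share in shares.items())

# Hypothetical study: the effect is large for "low" units and small for "high" units.
study = [
    ("low", 1, 5.0), ("low", 0, 1.0),
    ("high", 1, 3.0), ("high", 1, 3.0), ("high", 1, 3.0),
    ("high", 0, 2.0), ("high", 0, 2.0), ("high", 0, 2.0),
]

effects = stratum_effects(study)

# Effect in the study sample itself (25% "low", 75% "high").
sample_ate = weighted_effect(effects, stratum_shares(study))

# Hypothetical target context with the stratum shares reversed. These shares
# are exactly the kind of descriptive knowledge that has to come from somewhere.
target_shares = {"low": 0.75, "high": 0.25}
target_ate = weighted_effect(effects, target_shares)

print(effects, sample_ate, target_ate)
```

The point of the sketch is that `target_shares` is purely descriptive input. Without a measurement of the moderator in both the study and the target context, there is nothing to reweight by, no matter how well identified the within-study effects are.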

The current experimentalist paradigm is explicitly free riding on the quantitative descriptive knowledge produced by other institutions. Arnold Ventures, a social science philanthropic institution that has financed some of the major efforts in social science reform (including the Open Science Foundation and DeclareDesign), includes the following instructions in its two most recent Requests for Proposals for researchers to conduct RCTs:

We encourage the use of administrative data (e.g., arrest records, state employment and earnings data) to measure key study outcomes, wherever feasible, in lieu of more expensive original data collection.

and

To reduce study costs, we encourage the use of administrative data (e.g., wage records, state educational test scores, criminal arrest records) to measure key study outcomes, wherever feasible, in lieu of more expensive original data collection.

Offloading the task of DV measurement is an extreme example, but any attempt to generalize knowledge to a target context requires knowledge of that context. For many applications of interest to Political Science, we rely on data from governments or NGOs for some portion of the analysis or covariate adjustment. In other cases, we rely on survey data from longstanding surveys.

The ANES is quantitative description, and it is (at least for American political behavior) the most important resource we have. Major recent methodological contributions, including Adam Bonica’s DIME database and Pablo Barberá’s “tweetscores” model, are exercises in quantitative description and modelling. Longstanding Political Science interest in ideological scaling has allowed these contributions to receive the acclaim they deserve, but I think it is helpful to talk about them through the lens of quantitative description, to give the latter its due. The existence of this knowledge empowers a host of causal research designs and allows the knowledge thereby gained to be transported into the future target context.

Furthermore, there are new kinds of covariate information that social science has deemed to be important that could not have been measured in the past: novel covariates need to be conceived of before they can be measured and exist in data.

My preferred example is the concept of “digital literacy.” Research on the topic begins with sociologist Eszter Hargittai's work on the “second digital divide”: the inequality in internet skills among people with internet access. In general, people get better at using the internet the more they use it, and the more they use it with feedback on their performance to enable learning. The topic of heterogeneity of internet experiences hit the mainstream in the wake of the 2016 US presidential election in the context of F*ke News:

Using web-tracking software, Guess, Nagler, and Tucker (2018) report that “Users over 65 shared nearly 7 times as many articles from fake news domains as the youngest age group” during the 2016 US Presidential election.

Barberá (2018) finds that people over 65 shared roughly 4.5 times as many fake news stories on Twitter as people 18 to 24.

Grinberg et al. (2019) find that people over 65 were exposed to between 1.5 and 3 times as much fake news on Twitter as the youngest people; the slope depends heavily on partisanship.

All of these studies are quantitative description, but the only reason so much energy and so many resources were devoted to the topic (and the reason this kind of “merely” descriptive research was published in top outlets) was the public urgency surrounding it.

These trends are correlated with age, but it is unlikely that age per se is driving these effects. Instead, Andy Guess and I argue that “digital literacy” is the key theoretical moderator.

Assuming we’re right (and the problem is even worse if we’re wrong!), the process of theorizing the existence of this moderator and developing a survey instrument to measure it took Political Science decades. If we want to generalize studies about online political communication to the future, we might need to measure the digital literacy of the subjects in previous studies; obviously, this is now impossible.

If more effort had been devoted to quantitative description of people’s web habits at scale (or if more effort had been devoted to qualitative description, involving deep understanding of a small number of people’s web habits), this key heterogeneity might have been discovered earlier, which would have allowed us to have the necessary covariate knowledge from the causal studies produced in the meantime.

Causal knowledge is too expensive.

The causal revolution has demonstrated convincingly how difficult it is to produce causal knowledge. The response has been to search for historical accidents or to develop sufficient institutional capacity to execute large-scale, high-quality experiments.

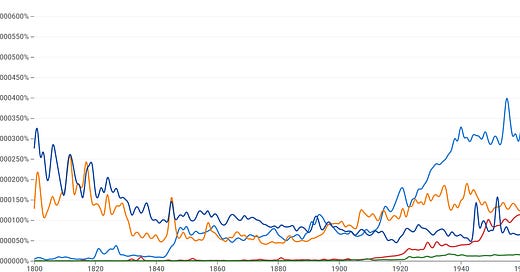

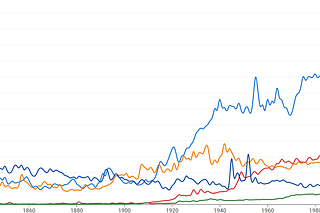

The causal revolution is happening alongside (and largely independent of) the fact that the social world is becoming more complex. The number of humans has exploded over the past century, so much so that perhaps 7 percent of all humans who have ever lived are currently alive. Communication technology and economic globalism have also dramatically increased the number of connections between people. These trends are multiplicative in producing complexity.

Meteorologist William B. Gail makes a compelling and tragic case for the possibility of “peak knowledge” in the context of our “practical understanding of the planet”:

Historians of the next century will grasp the importance of this decline in our ability to predict the future. They may mark the coming decades of this century as the period during which humanity, despite rapid technological and scientific advances, achieved “peak knowledge” about the planet it occupies.

Climate change is rendering much of our accumulated knowledge obsolete; “knowledge decays,” as I’ve tried to argue. The goal of research on external validity is to allow us to generalize the local knowledge we have acquired. But global weather patterns are fundamentally complex; we cannot adjust or weight our way into a prediction of an emergent phenomenon when all of the parameters with which we might do so are also in flux.

As Earth’s warming stabilizes, new patterns begin to appear. At first, they are confusing and hard to identify. Scientists note similarities to Earth’s emergence from the last ice age. These new patterns need many years — sometimes decades or more — to reveal themselves fully, even when monitored with our sophisticated observing systems. Until then, farmers will struggle to reliably predict new seasonal patterns and regularly plant the wrong crops. Early signs of major drought will go unrecognized, so costly irrigation will be built in the wrong places. Disruptive societal impacts will be widespread.

But it may also be the case that we have reached peak knowledge in a broader sense. The rate of production of social scientific knowledge has increased, but not enough to account for the increased complexity of our subject. If in fact we are in such a state (one in which a global force is redefining every social and economic relationship based on information over a period of only a few decades) as social scientists in 2020, expensive knowledge of local causal relationships gets us no closer to an application of that knowledge in the future.

Instead, we can devote our energies to understanding the world as it is.

As time passes, this research agenda allows us to know how the world was and is. Quantitative description is cheap, and much of the cost is fixed. In contrast, causal knowledge is expensive and much of the cost is marginal. The marginal cost of updating the DIME database and the DW-NOMINATE scores for each session of Congress is much lower than the fixed cost of creating those models in the first place. In contrast, the marginal cost of re-running a Twitter RCT every time Twitter’s userbase or platform policies change is very high.

In a complex and rapidly changing world, social science needs as many time series as possible. As someone who has spent a lot of time studying Twitter, I see a glaring hole at the center of this literature.

I would trade *almost all of the research ever published about Twitter* for a high-quality representative panel survey of Twitter users with trace data from their accounts matched with their survey responses. For a fraction of the total time and $$ spent studying Twitter, we could have had a decade of the ANES matched with “Twitter Comscores” (to measure media consumption) and matched with Twitter participation (a behavior so new and important that there is no analog analogue)—and it would have been global.

Instead we have a tidal wave of local, ephemeral causal findings that we don’t know how to aggregate or apply.

The previously (briefly) legible world of a knowable political media has overstayed its welcome in our collective consciousness. In the living memory of the most powerful generation in America today, it was possible for a college-educated, motivated middle-class individual to keep abreast of a significant minority (if not an outright majority) of political media.

Today, this is laughably false. I spend about an hour a day on Twitter getting news; many Baby Boomers spend several hours watching cable news; there is a proliferation of lightweight online political publications; there are millions of hours of political discussion on YouTube uploaded every day, and a single viewer can keep on top of the output of only a handful of the thousands of political "creators"; don't even get me started on TikTok. More broadly, social media means that people see political media created by their friends and family in an unbelievable variety.

As a result, as the social science disciplines matured in the era of "peak knowledge" of what political media *is*, they came to undervalue descriptive analysis. There exists a tradition of *qualitative* description, which involves an in-depth understanding of a small number of cases or individuals. But within the tradition of quantitative political science, there is insufficient effort devoted to pure description.

Instead, we assume that knowledge actors external to the process of academic knowledge production will fulfill our quantitative descriptive needs. Whether through the passive experience of the actors involved or through journalistic accounts, social scientific *agenda setting* has taken place implicitly. Taking this base knowledge of the world for granted has allowed us to prioritize the production of causal knowledge.

But this is no longer tenable. Our efforts at causal knowledge have become increasingly sophisticated, and study designs unsuited to causal knowledge production have been de-legitimized. It is fantastic that non-causal evidence is being driven out of the realm of causal knowledge production, but the sociological processes that allowed this to happen have also ignored the comparative costs and benefits of the causal knowledge that is produced.

I saw that Andrew Gelman posted on this very topic this morning. He makes the following point:

At the individual level, descriptive work is influential and it’s celebrated. Lots of the debates in macroeconomics—Reinhart and Rogoff, Piketty and Saez, Phillips curve, Chetty, etc. etc.—center on descriptive work and descriptive claims, and it’s clear that these are relevant, if sometimes only indirectly, to policy. But in general terms, it seems to me that social scientists get so worked up regarding causal identification.

These are all examples from Econ (though Gelman of course does excellent descriptive work in Political Science), but the general point comports with my examples above. There are many individual works of quantitative description that have been lauded as highly influential.

But we don’t have the institutions that broadly support quantitative descriptive work. It’s barely discussed in graduate methods (from what I know of the topic—I’d be happy to be proven wrong); there is little work in political methodology on quantitative description per se (again, there are major exceptions: network analysis, text-as-data, record linkages, ideological scaling—but this is not discussed under the proper label of description); and reviewers and editors don’t prioritize it.

I recently received the following comment from a reviewer in a thoughtful and positive review:

The use of "descriptive" as an epithet or diminutive in the discipline is far too common, but even so the analysis here certainly goes beyond "mere" descriptive research.

I prefer to defend quantitative description on its own terms. Raising the status of this kind of research will both enhance the usefulness of causal research and allow a broader number of researchers to make durable contributions to the social scientific knowledge production process.

But this is only possible if we reform the coin of the realm: peer-reviewed publications. We need a Journal of Quantitative Description.


Kevin, I have been saying this for years also! Post-tenure let's do it. Another benefit: it's an easier ask from platforms, which is where we desperately need data (at least in the political communication world). I think FB, the NYT, YouTube, etc would be much more willing to release aggregate descriptive information than the type of (detailed observational or experimental) data that researchers use to make causal(ish) arguments.

How do I sign my name to this? I don't see a "what is your name" option on Medium, which makes this not QUITE like the blogs of yore. But here we go: @emilythorson