Discover more from Never Met a Science

One of my friends is quite confident about the scope of empirical Political Science. As far as this friend is concerned, there are only two questions, which may be the same question:

When does violent conflict happen?

How do institutions map preferences onto outcomes?

If you’re someone with similarly strong a priori commitments, you might not find this post very interesting.

I study political communication, which I see as a frequently changing topic. There are certain elements of the topic (which branch into political psychology or physiology) that are relatively unchanging; we can always learn more about the part of the communication chain that happens when our receptors encounter media objects.

Most of my work focuses on the more contingent side. The central question is: what kind of political media is produced and consumed? I think this is where all the action is; the explosion in the supply of media (and a smaller but still massive increase in aggregate media consumption) is the primary story of the past few decades.

I’ll describe my ideal empirical research project in another post: a complete map of all the media consumed by a representative sample of Earthlings, over time, forever. This would help PolComm (and countless other disciplines) achieve their goals. But it’s also a useful case study for discussing agenda setting and social science.

At the 2020 NYU Center for Experimental Social Science conference (in early March….), I saw an excellent presentation by Joshua Kertzer on the tradeoffs in designing vignettes for survey experiments. The presentation was unusually candid about the motivation behind the research: reviewers sometimes complain that vignettes are either too specific or too vague, but we don’t have any scientific evidence about how this choice actually affects the results.

The intuitions of reviewers (and editors) are a key input to the social scientific process, but they are never studied explicitly. The discipline of political methodology functions reasonably well here: if there is an open disagreement about some question of research design or estimation, we have a well-defined process for adjudicating between different options. The process would be sped up if we simply aggregated the opinions of political scientists. The philosophers do this, and while the results are hilarious to the layperson, they serve the useful function of defining which questions are mostly settled in the field. Take the apparently quite contentious question of “Teletransporter (new matter): survival or death?”:

Accept or lean toward: survival 337 / 931 (36.2%)

Other 304 / 931 (32.7%)

Accept or lean toward: death 290 / 931 (31.1%)

¯\_(ツ)_/¯

We do not, however, have an analogous process for determining whether a topic is sufficiently important, especially “for a general interest journal such as the Journal of Political Science Review,” or whether it would be better suited to a subfield journal.

Sometimes I think the best way to improve my publication chances with research on the latest platform (I’ve got a paper on TikTok in the pipeline!) is to buy social media ads targeted at a hyper-specific list of potential reviewers. Flooding their timelines with sponsored posts about how TikTok is “Cable News for Young People” is likely (per agenda-setting theory) to at least make them think that the topic is important enough to merit research.

But this knowledge, these intuitions, are formed somehow, even in the absence of self-serving ad buys. There are really only two possible pathways: personal experience and the media. This is complicated somewhat when the area of inquiry is the media itself.

Political Scientists studying the US are radically non-representative of the US population. We’re much better educated, more interested in politics, and have the capacity to consume massive amounts of political media. There is widespread recognition that we need to remind ourselves of these baseline facts—ever read Converse 1964?—but it takes active effort to study ourselves in order to study the world.

For less purely positivist disciplines, this insight is about as revelatory as the idea that politicians are primarily motivated by re-election. Sociology has fully embraced the idea of “reflexivity,” my understanding of which has been best developed by reading the Wikipedia page about Pierre Bourdieu:

> Bourdieu argued that the social scientist is inherently laden with biases, and only by becoming reflexively aware of those biases can the social scientists free themselves from them and aspire to the practice of an objective science. For Bourdieu, therefore, reflexivity is part of the solution, not the problem.

For as long as we’ve had institutional social science, this has been a serious problem. But I think the case of media technology in the postwar era is unique in that the experiences of social scientists qua consumers of political media were unusually representative of those of the country as a whole.

It was straightforward to identify who made the broadcast media that dominated the 20th century: the expensive technology needed to produce television and radio ensured a limited supply. These media are glamorous, and both the corporations involved in the back end of television broadcasting and especially the personalities we tuned in to see became prominent entities in society.

Identifying who consumed this media was only slightly more difficult. The early days of radio relied on handwritten letters from audiences for qualitative feedback, but penetration of the technology into the fabric of American life was rapid and constrained only by costs. Television’s adoption rate was even faster, and came at a time when market research like the Nielsen ratings system allowed for a rough but generally accurate picture of how many and what kinds of people were watching certain shows.

Understanding the dynamics of the production and consumption of these linear broadcast technologies was thus manageable. Crucially, there was a countable and reasonably small number of different media products a given consumer could choose between at any given time. The top-down, large-scale production of these media products also made them legible to the researcher, and their impact on society was rapid and widespread. This was only possible because radio and television are easy to consume: everyone is trained from birth to listen to human speech, alone or paired with a visual stimulus. Other than language barriers or the rare niche program, anyone could turn on their radio or television set and share an experience with anyone else tuned in.

The rise of the internet changed everything. It allows production and consumption at a scale far smaller than that of the previous “mass media,” making it impossible for an individual to know what everyone else is up to simply by being an avid and broad consumer of political media. This in turn makes the non-representativeness of Political Scientists’ media diets a problem for our understanding of important trends in the media sphere.

The media is how we learn about the world outside of our own experience; we rely on the media to tell us about important trends in other realms of social life. The problem is that academics and media professionals inhabit a mutually constituted and reinforcing echo chamber. Corey Robin called this the “Historovox” in the context of the Trump Presidency, and I think that something similar is afoot in the context of agenda setting and social media.

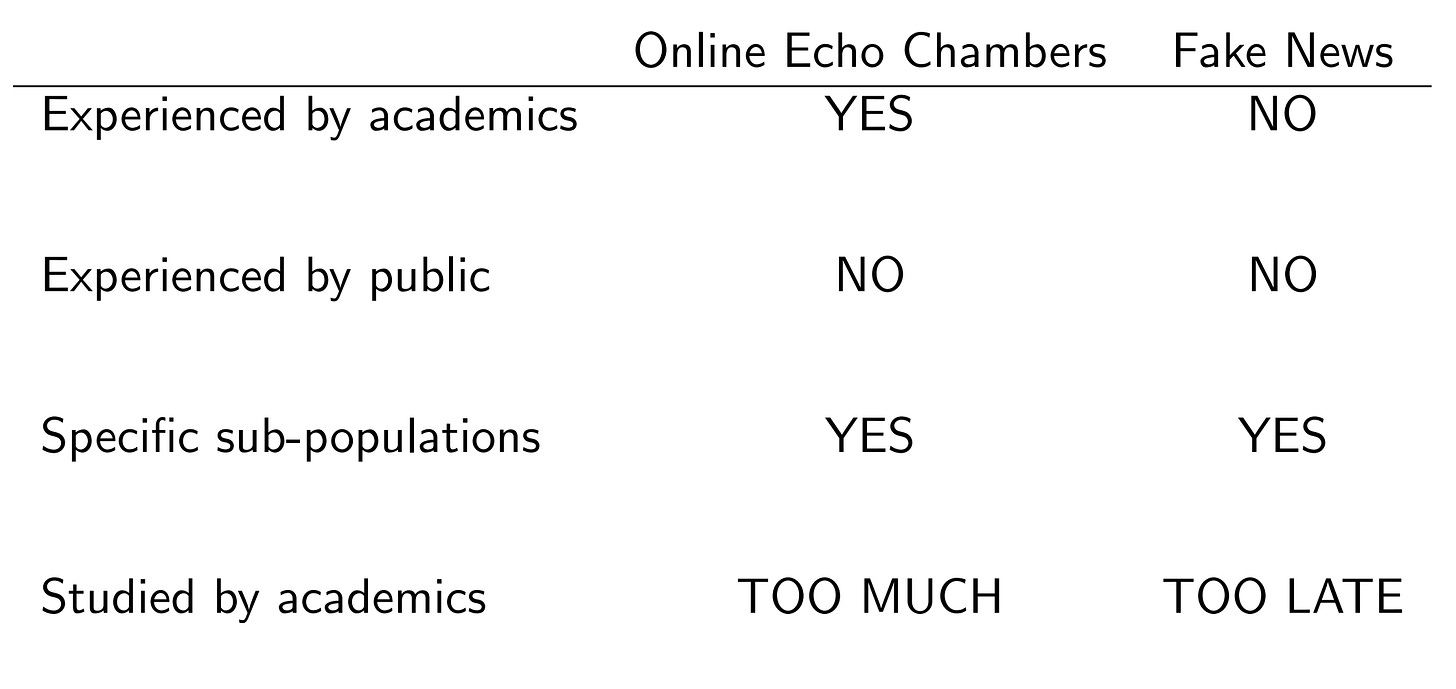

In keeping with the idea of reflexivity, the best way to understand the Historovox echo chamber is by talking about Online Echo Chambers. Considerable energy has been spent investigating the phenomenon of echo chambers. And it turns out that they don’t exist... except among users in specialized (partisan or professional) networks.

Andy Guess has done the best work on this topic. His forthcoming AJPS paper concludes that “if online ‘echo chambers’ exist, they are a reality for relatively few people who may nonetheless wield disproportionate influence and visibility in society.” In a Knight Foundation white paper, he and his co-authors didn’t have to be quite so circumspect: “public debate about news consumption has become trapped in an echo chamber about echo chambers that resists corrections from more rigorous evidence.”

(In a recent Twitter thread, he discusses the issue of defining an “echo chamber”: the threshold can’t just be >50% congruent information, but it also can’t be as strict as 100% congruent information. I think the best definition uses the offline baseline: online echo chambers exist if online news consumers get a higher percentage of their news from congruent sources than offline news consumers do.)
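To make the offline-baseline definition concrete, here is a minimal Python sketch. It assumes hypothetical individual-level data in which each respondent has already been classified as an online or offline news consumer and each news item has already been coded as congruent or not; the `NewsDiet` class, the function names, and the toy numbers are all illustrative inventions, not anyone’s actual data or method.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class NewsDiet:
    """A hypothetical respondent's news diet over some observation window."""
    online: bool     # True if the respondent is classified as an online news consumer
    congruent: int   # number of news items from ideologically congruent sources
    total: int       # total number of news items consumed (assumed > 0)

    @property
    def congruent_share(self) -> float:
        return self.congruent / self.total


def echo_chamber_gap(diets: list[NewsDiet]) -> float:
    """Mean congruent share among online consumers minus the offline mean.

    Raises statistics.StatisticsError if either group is empty.
    """
    online = [d.congruent_share for d in diets if d.online]
    offline = [d.congruent_share for d in diets if not d.online]
    return mean(online) - mean(offline)


def online_echo_chambers_exist(diets: list[NewsDiet]) -> bool:
    # Offline-baseline definition: online echo chambers exist if online
    # consumers get a higher share of their news from congruent sources
    # than offline consumers do.
    return echo_chamber_gap(diets) > 0


# Made-up toy data: online mean share is 0.5, offline mean share is 0.6.
toy = [
    NewsDiet(online=True, congruent=6, total=10),
    NewsDiet(online=True, congruent=4, total=10),
    NewsDiet(online=False, congruent=5, total=10),
    NewsDiet(online=False, congruent=7, total=10),
]
print(online_echo_chambers_exist(toy))  # False: online share is below the offline baseline
```

A real analysis would also have to settle how congruence is coded and how respondents are weighted; this sketch simply averages each person’s congruent share within each group.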

The good news is that science works: the empirical consensus falsified the theory of ubiquitous echo chambers. But recall why so much energy was devoted to the topic in the first place: academics and journalists found it plausible because it accurately described our own experience. This is a huge problem, because the supply of social science research is inelastic, so there are serious opportunity costs to going down this “Rabbit Hole.”

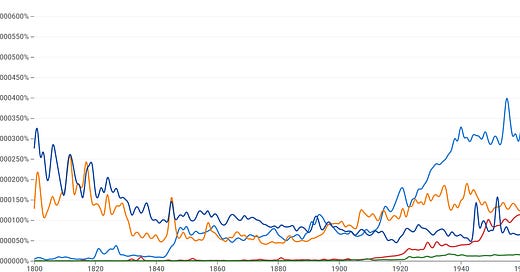

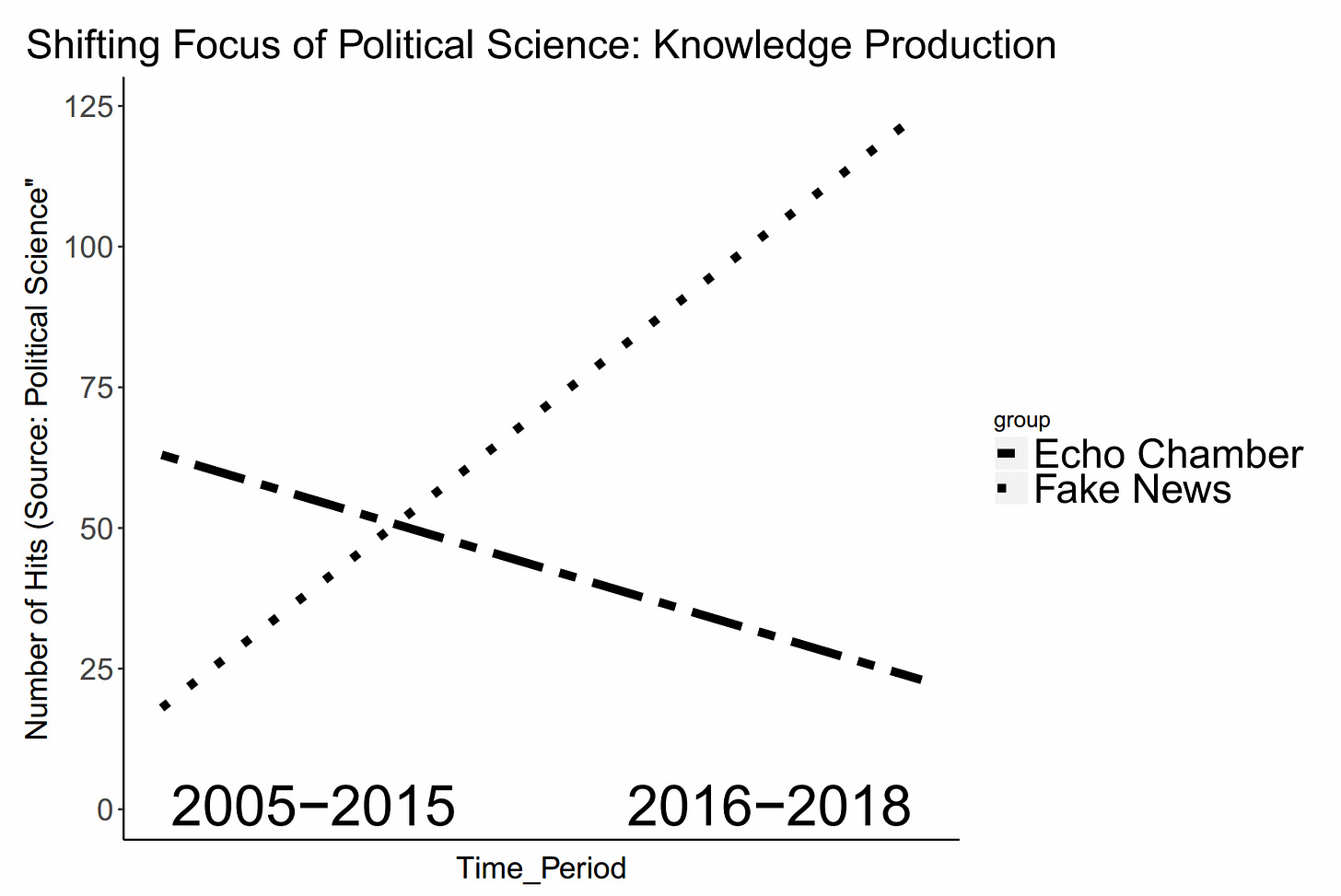

I made this graph a while ago, but I bet the slope of the Fake News line is way higher now. The agenda has been re-set: friendship with Echo Chambers ended, Fake News is our best friend now.

Political Science was criticized for “not seeing Trump’s win coming”; this is mostly stupid. But we really should’ve seen Fake News coming. It’s a much more pressing problem for the modal Facebook user, but compared to that user, we’re both way more likely to be in an online echo chamber and way better equipped to detect Fake News.

On the other hand... the shock from 2016 may have caused an overcorrection. Studies proliferated: how do we fact-check Fake News? What psychological mechanisms predict consuming Fake News? Deep Learning + Fake News + Data Science = F1 score that eclipses published results on The Fake News Dataset!!

Everyone agreed that Fake News was An Important Question (including funding agencies, another crucial set of actors who fall squarely within the Historovox).

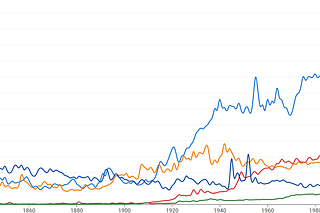

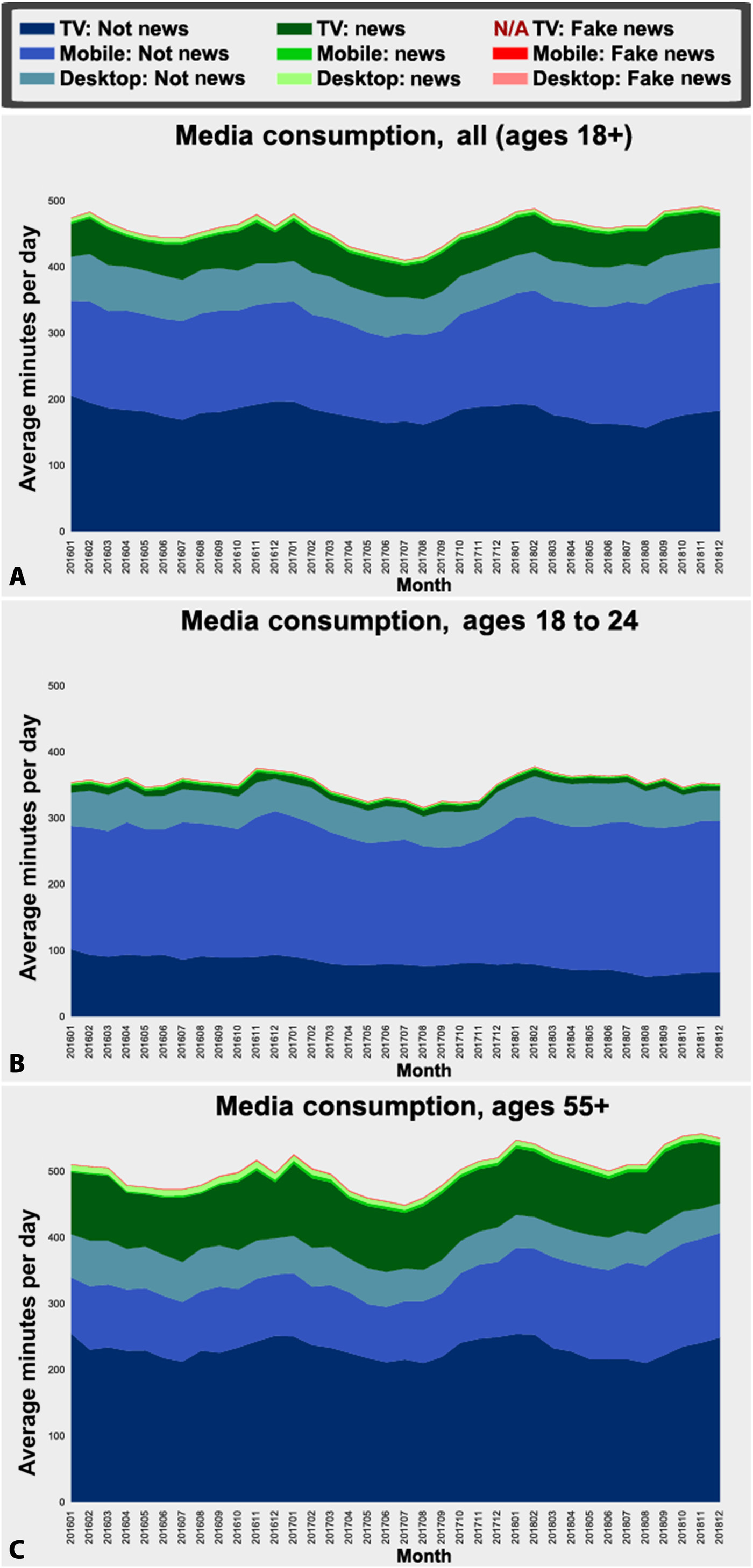

Here’s a graph from Allen et al. (2020), in Science Advances. This is as close to my ideal empirical research program as I’ve ever seen. And it doesn’t exactly make me want to study Fake News.

In fact, it doesn’t make me want to study news at all—it makes me want to study TV.

(As a compromise, I’m now primarily studying televisual social media like YouTube and TikTok. Human beings are designed to communicate televisually; in the long run, we may find that societies with a broad emphasis on the written word are a historical artifact, and that written communication is a specialized technology.)

Eunji Kim takes this seriously: she starts with broad, important trends in American public opinion (the persistence of belief in the “American Dream” despite declining income mobility) and in American media weighted by viewership (a shocking share of all media consumed in this country is “rags-to-riches” reality television). She finds that the latter does in fact cause the former.

This is how social scientists should set the academic agenda: not by consulting our own experiences, and not by relying on what the media thinks is important. We need quantitative description to tell us what is going on in the world so we can try to explain or predict the dominant trends. Cable television is still constrained in its production, but other televisual media are not.

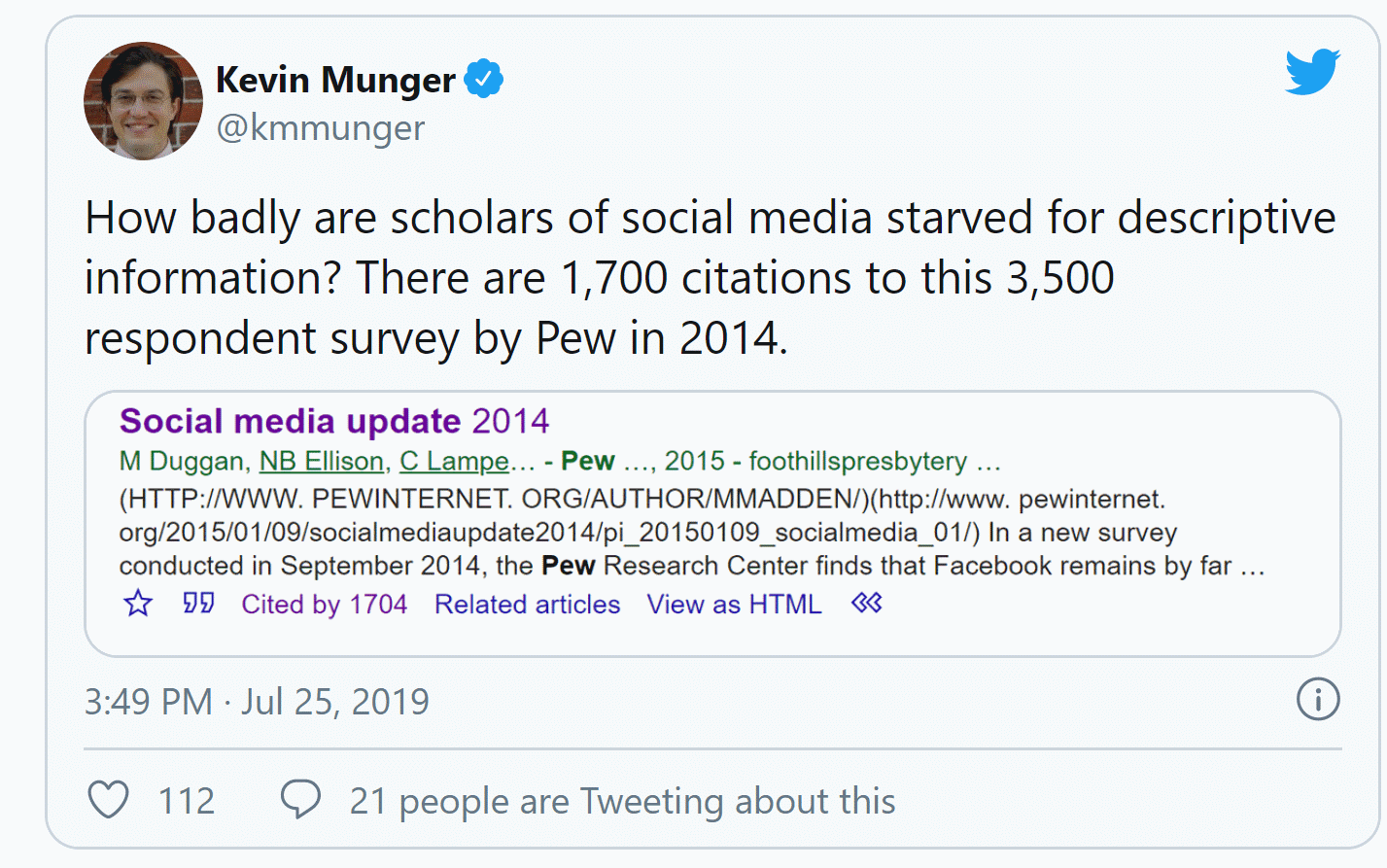

This is perhaps my most influential tweet, in that I’ve been told that someone shared a screenshot of it during a job talk.

The reason this survey has so many citations is that it fills a need: establishing the importance of a topic at the beginning of academic papers. “XX percent of Americans use social media to get news every day”; therefore, this subject is of sufficient general interest to merit publication in the JPSR.

But this one survey also has so many citations because there’s basically zero competition in the quantitative description space. Entire disciplines have offloaded this task to, literally, just the Pew Research Center. We’re extremely lucky to have them, and they do great work, but this reflects an inefficient allocation of rigor in the current process of social scientific knowledge production.

Two proposals for increasing that rigor:

1. More quantitative description: What are people actually doing? What media are they consuming, and how much?
2. Survey of researchers: What topics do we think are most important? Where is there the greatest theoretical disagreement?

At present, the social science agenda is set by the intuitions of reviewers, which are themselves some idiosyncratic (edit: or worse, systematically biased by the composition of social scientists and their relative status) combination of personal experience and media reports. The entire point of social science is to transcend these intuitions, so we need to allocate more rigor to this task. Scientists should use science to decide what to study!

Meta-point: this post is stitched together from other writing and presentations I’ve done but haven’t gotten published. This is the name of the game: the zero marginal cost of digital production means that it’s individually rational to repeat yourself, since it’s extremely unlikely that anyone reads and remembers all your stuff. Apologies to the die-hard Munger heads out there (hi mom), but I didn’t invent the logic of contemporary communication technology. Copy+Paste go brrrrr.
