Discover more from Never Met a Science

How Should We Allocate Scarce Social Science Resources?

or, Is it Possible to Stop Saying "Echo Chamber"?

It's been nearly two years since I started this blog! The pandemic had disrupted the natural cycle of metascientific discussions at conferences, and I was hankerin to keep them going.

There does appear to be some demand for this kind of discursive space. Conversations sparked by this blog led to the founding (over a year ago!) of the Journal of Quantitative Description: Digital Media. I’ve gotten in touch with people from many different disciplines who are trying to make social science better but are running into fundamental issues with existing institutions, intuitions, concepts, methods. In other words, people who need Metascience.

The dialectical logic that led social science to the present metascientific crisis is in my view straightforward.

Universalism (rational choice theory, cross-country regression) ->

Localism (causal inference, RCTs) ->

External Validity (curse of dimensionality, scope conditions)

In other words: since we cannot study *everything*, and we have clawed our way towards credible technologies for studying *some things*, the most pressing question we face is *which things* to study and how to study them.

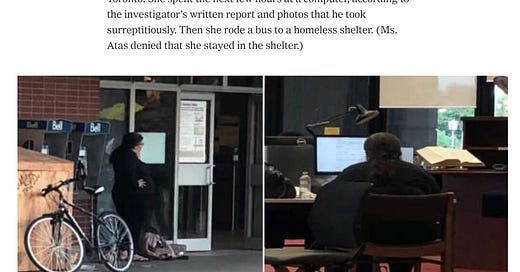

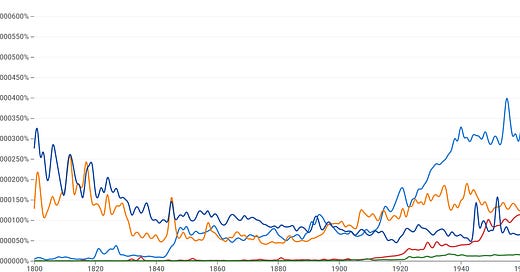

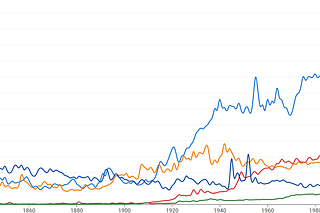

The highest-level problem social science faces is conceptual confusion. Unsurprisingly, I think this is downstream of the internet and social media. The science (and society) of the printing press was built on deep, unstated sociolinguistic processes for aligning the mental vocabularies of a large, dispersed group of humans. The present situation is undoubtedly worse; human-speed processes of meaning-making and cognition have not had time to respond to the shock of the internet.

A recent, controversial article in The Atlantic uses the Tower of Babel as a motivating metaphor. I love this metaphor. It provides a vivid illustration of contemporary conceptual confusion. The “high modernist,” centralized control of communication during the era of literacy and then broadcast media held intellectual communities together, made sure that we were speaking the same language. The democratization of communication allows everyone to use their own language—liberatory, I hope, in the long run, but conceptual chaos for now.

Theoretical throat-clearing out of the way, my goal today is to provide some clarity to the term Metascience, to promote more productive conversations.

Metascience is the study of the allocation of scarce social science resources.

This definition helps carve out conceptual space between related areas of inquiry: sociology of science, philosophy of science, scientometrics. These epistemic communities vary in terms of both the methods they deploy and their aims. According to the Wikipedia page and www.metascience.com, one definition of Metascience includes these other traditions, but I find my more precise definition more useful.

My insistence on justifying my definition in terms of “usefulness” is no accident. Talking about whether a definition or concept is “accurate” or “true” is itself not useful. This move is taken straight from the school of American Pragmatism and in particular from Richard Rorty, of whom I’ve recently become enamored. Invoking “usefulness” does, of course, raise the crucial question:

Useful for what?

This is the fundamental question of Metascience: before we can decide how best to allocate our resources, we have to have a vision of what we are trying to achieve.

My definition of Metascience invokes “resources,” as a nod to the Economists ofc, but the most important resource for scientists is rigor. The peer review process involves checking that the authors’ work is sufficiently rigorous at the relevant points. The relative rigor required varies across disciplines and over time, but not in any systematic fashion. In the realm of survey experiments, for example, psychologists emphasize construct validity, while political scientists tend to be more concerned with external validity. This happened, in my understanding, for path-dependent reasons in the history of the respective disciplines. Computer scientists in the social media space are extremely empirical, working to improve on objective metrics of predictive performance; communications scholars are primarily concerned with the refinement of theories.

The disconnect becomes apparent in the peer review process for interdisciplinary work. A paper might have three reviewers from three different disciplines and thus three distinct evaluative criteria. The zero-sum nature of this process is familiar to anyone working under a journal-imposed word limit and facing one reviewer who wants more theoretical development, another who demands a replication on a different population, and a third who needs to see a lot more detail about the measurement algorithm's performance.

The most common response to this incoherence is simply to avoid interdisciplinary work. Not exactly a ringing endorsement of the scientific process, but a reality of the career incentives facing social scientists today. But what if we actually cared? What if we had to argue it out: does every paper really need an explicit robustness check on every single one of the dimensions dreamed up by the union of all social science disciplines? Even within Economics, in its current causal-inference-obsessed iteration, any self-respecting paper needs to have dreamed up three to five specific threats to the causal story, with some ~numbers-style~ demonstrations of why those threats aren't a problem.

The intuitive (naïve) response is....yes, of course, we should be doing the most rigorous social science we can. We care about The Truth! If the high priests of psychometrics say that Truth is impossible without validating my survey battery, then so be it. If the econometric inquisitors say that I need to do a heckin endogeneity test, no problem -- anything for Truth.

Metascience is necessary because it provides a clear-eyed look at the trade-offs the above logic entails. If we want to hold everything to a higher standard of rigor --- for a fixed quantity of social-scientist hours and dollars --- we have to do fewer things.
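A toy sketch of that trade-off, with entirely made-up numbers: assume a fixed budget of researcher hours, a hypothetical base cost per study, and a hypothetical cost for each additional rigor check a study must pass. None of these figures are estimates of anything; the point is only the arithmetic.

```python
def studies_affordable(budget_hours: float,
                       base_hours: float,
                       hours_per_check: float,
                       n_checks: int) -> int:
    """Number of complete studies a fixed budget can fund.

    All inputs are hypothetical: each study costs a base number of
    hours plus a fixed number of hours per required rigor check.
    """
    cost_per_study = base_hours + hours_per_check * n_checks
    return int(budget_hours // cost_per_study)


if __name__ == "__main__":
    BUDGET = 10_000  # assumed total researcher hours, held fixed
    for n_checks in (0, 3, 6):
        n = studies_affordable(BUDGET, base_hours=200,
                               hours_per_check=50, n_checks=n_checks)
        print(f"{n_checks} checks per study -> {n} studies")
```

Under these assumptions, going from zero to six required checks per study cuts the number of fundable studies from 50 to 20. The real cost curve is surely messier, but any version in which rigor costs hours has the same shape.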

The next step in the dialectic is the question of how we decide which things to do. This begins at the level of which concepts we use to understand the social world.

As I have written, I believe that analyses of “echo chambers” and “algorithmic radicalization” -- and at this point, “misinformation” -- are simply not useful. They tend to confuse more than they illuminate, and worse, to serve the interests of the very people that many scholars who use these terms believe they are holding to account.

Within the framework of validity valorized by positivist, non-meta social science, how can we register this point? Each paper is supposed to be evaluated in terms of whether it is “valid” or “accurate” or “true.”

A thought experiment: what paper could I write that would convince social scientists to stop saying the words “echo chamber”? A paper might claim that “echo chambers don’t exist” or “echo chambers exist for group X but not group Y”; the paper would have some data that we have been trained to understand as either sufficient or insufficient to justify this claim. But what data could I provide to justify the claim that “research about social media through the lens of ‘echo chambers’ is not useful”?

I think it is useful to think about this problem as “academic agenda setting.” For research about social media, at least, the agenda is set primarily by current events, by the memes that shine a floodlight on one particular process or phenomenon.

In other words: social scientists have elected to allocate very little rigor to this stage of the scientific process. We are unlikely to be doing a good job of this simply because we aren’t trying to do a good job of it.

As my definition of Metascience emphasizes, formalizing this process would entail unavoidable tradeoffs. We would either have to allocate less rigor elsewhere or resign ourselves to studying fewer things. And we can’t discuss these tradeoffs without a goal, some shared sense of what we are trying to accomplish.

For all our talk about democracy, social science is a fundamentally undemocratic enterprise. Rather than deliberation and voting to decide on our shared goal, we are engaged in a decades-long war of attrition waged through academic hiring and peer review.

Perhaps this is the best we can do; perhaps more centralization would have unforeseen consequences for scientific innovation. Certainly, it would be less fun. I currently enjoy my ability to attack problems how I see fit. A more centralized system might deprive me of the satisfaction of self-determination that makes up much of my overall compensation package.

But within the present system, conceptual confusion is inevitable. Without an agreement on what we’re trying to do, we lack a coherent framework for deciding how to allocate our scarce resources.

This problem will only become more apparent as the contradictions of the current paradigm pile up. Among the causal revolutionaries, an External Validity Crisis is only a few years out. Research papers about the internet and social media continue to be churned out, long after the motivating theoretical concepts have become obsolete. Metascientific discussion about goals and analysis of means will provide a pathway to keep pushing social science forward.
