Against Replication

Social Science Should Not Try To Be Natural Science

The maxim that "replication is the cornerstone of science" produces contradictions in the realm of social science.

An important contemporary vector for the unhelpful natural/social science confusion is the spillover from the more biological side of psychology to social psychology and then media effects, part of the political communication/psychology/behavior "trading zone." The methods are essentially the same: an experimental subject is placed in a lab and a stimulus is delivered. This description, intentionally, invokes the canonical image of natural science: a Scientist, with unruly gray hair and a white coat, mixes colorful chemicals in beakers, or shows bright lights to hairless apes and sees what buttons they press.

It is thus unsurprising that social science reform is most advanced in this area. Other areas of inquiry have even more pressing issues to address. But social psych is close enough to natural science that it has been able to discover just how far away they still are. This is thus where reforms that aim to make replication more likely have come from: pre-registration, registered reports, etc.

Another and in my view more radical move is to *redefine* replication in order to square the reality of social science with the natural science tradition. Nosek and Errington (2020) argue that the "common understanding...of replication is intuitive, easy to apply, and incorrect." Instead of “repeating a study’s procedure and observing whether the prior finding recurs,” they assert that "Replication is a study for which any outcome would be considered diagnostic evidence about a claim from prior research."

This rhetorical move may lead to better scientific practice at the margin by sidestepping sometimes tedious debates about whether one experiment is an "exact replication" of another. It is certainly a move that allows social science to proceed without abandoning our natural science aspirations/delusions or really changing our practice very much.

But that doesn't make it a good move. I argue that this brazen re-definition is evidence of a paradigm in distress. Instead of re-defining dictionary words, why not accept that social science is not natural science and abandon the idea of replication as the cornerstone of social science?

In the canonical case, replication makes sense: other members of the scientific community can perform the same procedure and produce the same outcome. The problem lies in the deceptiveness of natural language: "perform the same procedure" is doing way too much work.

The *relevant causal factors* in the natural science experiment are very likely to be the same across instances. If you mix baking soda and vinegar, you get a cool little volcano --- presuming it isn’t being done at -1000 degrees C. But if you're mixing baking soda and vinegar, it is very likely that you are doing so in conditions amenable to human life!

Mixing baking soda and vinegar *on Pluto* would thus not count as "performing the same procedure" as doing so in an elementary school classroom. But this problem cannot arise.

There is a useful paper about the generalizability of the effect of giving parachutes to people jumping out of airplanes. The procedure---which was in fact carried out, god bless them---involved subjects jumping out of airplanes, either with or without parachutes. The outcome was whether or not the subject survived.

The gimmick is that the airplane was on the ground!

The humor comes from linguistic confusion: when we read about jumping out of airplanes and parachutes, we assume that the airplanes are *in the air*. The causal model is that falling thousands of feet causes death, but that parachuting thousands of feet generally does not.

I am drawing a sharp distinction between natural and social science. It makes intuitive sense through the lens of both the history of Western science (natural science has enabled radical technological advances without which contemporary life would be impossible; quantitative social science recently discovered that most research more than 30 years old is uselessly p-hacked, endogenous or worse) and the divisions that structure contemporary academic institutions.

Although the scolds who know too much about STS or Latour or Philosophy of Science might say that everything I'm saying about social science also applies to natural science, I think it is simpler and more useful, as a social scientist, to simply say that yes natural science is cool and good but we're dealing with something else entirely.

My claim is that it is *much less likely* for "perform the same procedure" to be a coherent/well-defined practice in social science than natural science. In the classic Hotz et al. (2005) on generalizability, they consider the case of a jobs training program. What is the effect of a "jobs training program" on subjects' career outcomes? It turns out that even when different cities attempt to "perform the same procedure," there are simply too many causally relevant factors that vary from instance to instance: class size, teacher skill, time of day, etc. They call this problem "Treatment heterogeneity," and the general problem falls under the "T-validity" component of external validity identified by Egami and Hartman. For social science "procedures" that matter, it is almost never the case that the classic definition of "replication" makes sense.

Within political science and communication, there is a glaring exception that proves the rule: media effects research. Due to the wonders of digital reproduction, it is trivial to deliver *exactly the same treatment* to subjects in a single study, or in a replication study. Because of this incredible advantage, methods researchers in this area have been able to discover new sources of causally-relevant variation, making our experiments ever-more verisimilitudinous. My favorite example is when Arceneaux and Johnson set up an experimental setting (in a mall!) complete with couch and remote control in order to conduct a media effects experiment that took seriously the experience and agency of the normal television viewer.

To be clear: these kinds of as-yet-untheorized-and-thus-unmeasured context (C-Validity) variations exist in every area of inquiry. And they are important. We just haven't been able to discover them because they are swamped by other sources of variation in either T- or X-Validity (the pool of subjects vs target population).

Here again, the fact that immediate stimulus-response research like most social psychology and media effects has been at the vanguard of science reform is an example of the non-generalizability of metascience: we cannot trivially extend conclusions about how to reform social science by looking only at the areas where it is easiest to experiment with science reform. This is a meta-version of what Allcott (2015) calls “site selection bias,” among the most damning problems for the generalizability of field experiments. The areas where it’s easiest to run an experiment are non-representative of all areas we’d like to know about; for one thing, we know they’re easier to run experiments on!

I’ll argue that a prominent paper by Coppock, Leeper & Mullinix (2018), “Generalizability of heterogeneous treatment effect estimates across samples,” is an example of meta-site selection bias:

even in the face of differences in sample composition, claims of strong external validity are sometimes justified. What could explain the rough equivalence of sample average treatment effects (SATEs) across such different samples? We consider two possibilities: (A) effect homogeneity across participants such that sample characteristics are irrelevant, or (B) effect heterogeneity that is approximately orthogonal to selection. Arbitrating between these explanations is critical to predicting whether future experiments are likely to generalize.

We aim to distinguish between scenarios A and B through reanalyses of 27 original–replication pairs…. This set of studies is useful because it constitutes a unique sample of original studies conducted on nationally representative samples and replications performed on convenience samples [namely, Amazon Mechanical Turk (MTurk)] using identical experimental protocols.
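The distinction in the quoted passage can be made concrete with a toy simulation. This is a minimal sketch and not anything from the paper: the moderator `x`, the unrelated trait `z`, the effect sizes, and the selection probabilities are all hypothetical, and it averages the true unit-level effects directly instead of estimating them from a randomized treatment. It shows a sample selected on something orthogonal to the moderator (scenario B) recovering the population average, and a sample selected on the moderator itself missing it.

```python
import random


def simulate_population(n, rng):
    """Build n units, each with a moderator x, an unrelated trait z, and a true effect tau."""
    population = []
    for _ in range(n):
        x = rng.random() < 0.5        # hypothetical moderator of the treatment effect
        z = rng.random() < 0.5        # hypothetical trait that does not moderate the effect
        tau = 3.0 if x else 1.0       # heterogeneous effect: larger when x is true
        population.append({"x": x, "z": z, "tau": tau})
    return population


def average_effect(units):
    """Average of the true unit-level effects (a stand-in for an estimated SATE)."""
    return sum(u["tau"] for u in units) / len(units)


rng = random.Random(42)
population = simulate_population(100_000, rng)
pate = average_effect(population)

# Scenario B: selection depends only on z, which is independent of the moderator x.
orthogonal_sample = [u for u in population if u["z"]]

# Contrast case: selection depends on the moderator itself.
selected_sample = [u for u in population if rng.random() < (0.9 if u["x"] else 0.1)]

sate_orthogonal = average_effect(orthogonal_sample)
sate_selected = average_effect(selected_sample)

print(f"population average effect:     {pate:.2f}")
print(f"sample selected on z (orthog.): {sate_orthogonal:.2f}")
print(f"sample selected on x:           {sate_selected:.2f}")
```

Note what the sketch takes for granted: every unit receives literally the same treatment, so `tau` is well defined. That assumption is exactly the one at issue here.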

My claim is that a paper titled “Generalizability of heterogeneous treatment effect estimates across samples” which finds that

Our results indicate that even descriptively unrepresentative samples constructed with no design-based justification for generalizability still tend to produce useful estimates not just of the SATE but also of subgroup CATEs that generalize quite well.

could only be published in PNAS if “using identical experimental protocols” is a coherent concept. For most of the “sites” of empirical social science research, this is not the case.

(The discussion section of the paper is extremely clear on this point:

this discussion of generalizability has been focused exclusively on who the subjects (or units) of the experiments are and how their responses to treatment may or may not vary. The “UTOS” framework identifies four dimensions of external validity: units, treatments, outcomes, and setting. In our study, we hold treatments, outcomes, and setting constant by design. We ask “What happens if we run the same experiment on different people?” but not “Are the causal processes studied in the experiments the ones we care about outside the experiments?” This second question is clearly of great importance but is not one we address here.

)

So. This finding about generalizability suggests that “running the same experiment on different people” produces the same result. I’m arguing that this finding is one of the only instances in which “running the same experiment on different people” is a coherent concept in political science. That’s precisely why this was the instance in which we see the first generalizability results: it’s the easiest instance in which to find them. Textbook “site selection bias.” (To be clear, the use of “bias” has zero normative connotations!)

Is this excellent work? Absolutely top-notch. Is it plausible to start here? For sure.

What should we infer about the generalizability of this radically non-representative generalizability result? Almost nothing.

To be fair to Nosek and Errington (2020), my argument agrees with their diagnosis completely, and their paper is a great introduction to the problem; I encourage everyone to read it.

I just disagree with their semantic solution. "Replication" is simply not a very useful concept for us.

Social science should accept that we are not natural science. "Perform the same procedure" is *almost always* a coherent concept in natural science and *almost never* a coherent concept in social science as it currently exists. The general response to the replication crisis has been to try to make “perform the same procedure” a coherent concept in more of social science, to bend our practice to some ideal of what Science should be.

“Replication” is simply too central a concept, too common a word, for their admittedly elegant stab at redefinition to succeed, sociologically. It also carries connotative baggage about how we should allocate our scarce social science resources—that is, it affects our metascientific priors in ways with which I disagree.

Assume we have the resources to run 1,000 experiments a year. We have 100 contexts, 100 treatments, 100 samples and 100 outcomes. [This is C-, T-, X-, and Y-Validity, respectively]. That is 100 million possible combinations. If we try to brute force it, non-parametrically, we’ll have figured out human behavior in 100,000 years.
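The arithmetic of this thought experiment is easy to check. The sketch below uses only the hypothetical numbers given above (100 levels on each of four dimensions, 1,000 experiments a year); the function names are mine. It computes the size of the combinatorial space, how long an exhaustive sweep would take, and how much of the space a decade of work could touch.

```python
# Hypothetical sizes from the thought experiment above.
N_CONTEXTS = 100      # C-Validity
N_TREATMENTS = 100    # T-Validity
N_SAMPLES = 100       # X-Validity
N_OUTCOMES = 100      # Y-Validity
EXPERIMENTS_PER_YEAR = 1_000


def design_space_size(*dimension_sizes):
    """Number of distinct combinations across all dimensions."""
    size = 1
    for n in dimension_sizes:
        size *= n
    return size


def years_to_exhaust(space, per_year):
    """Years needed to run every combination exactly once."""
    return space / per_year


def fraction_covered(space, per_year, years):
    """Share of the space reached in the given number of years, with no repeats."""
    return min(1.0, per_year * years / space)


space = design_space_size(N_CONTEXTS, N_TREATMENTS, N_SAMPLES, N_OUTCOMES)
years_needed = years_to_exhaust(space, EXPERIMENTS_PER_YEAR)
decade_share = fraction_covered(space, EXPERIMENTS_PER_YEAR, years=10)

print(f"combinations: {space:,}")
print(f"years for a full sweep: {years_needed:,.0f}")
print(f"share covered in 10 years: {decade_share:.4%}")
```

Ten years of experiments reach a vanishingly small share of the space, so how those experiments are spread across it matters a great deal.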

But what do we do in the next 10 years?

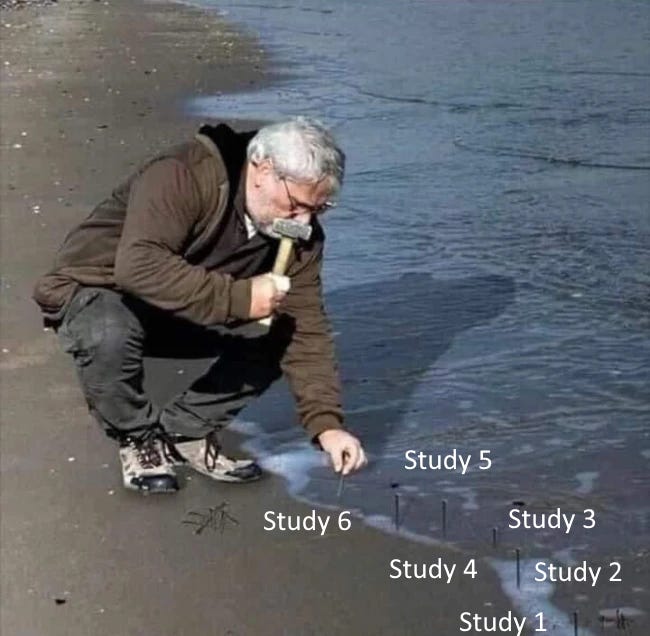

Replication implies trying to spend our limited resources running many experiments that are *as close to each other as possible*. We come upon some combination of context, treatment, sample and outcome that produces a significant result and then carefully explore the result of the combinatorial space to see whether it “replicates,” perhaps iteratively discovering sources of variation.

This is the spirit of the ManyLabs or Metaketa initiatives. Ironically, this only makes sense if you accept that *replication is impossible*. If replication were possible, it would be silly to "perform the same procedure" (hold Treatment and Outcome fixed) in many different Contexts and Samples; we’d just get the same result over and over again. These efforts are valuable precisely because everyone knows that “perform the same procedure” will produce different (and interesting!) results.

So I think we should embrace the opposite ethos and randomize everything. We should use our limited resources to explore a much larger but less compact region of the combinatorial space. This is the approach advocated by Tal Yarkoni in his phenomenal paper on the Generalizability Crisis: the Radical Randomization approach like that used in Baribault et al (2018), who “randomly varied 16 different experimental factors in a large multisite replication (6 sites, 346 subjects, and nearly 5,000 “microexperiments”) of a subliminal priming study.” My intuition is that we should go even farther and not talk about “replication” at all; the Baribault et al approach holds fixed the “treatment.”

The Conjoint Experiment, for example, is a triumph of Radical Randomization, and the reason why it has thus far been applied almost exclusively to digital media stimuli is that experiments using digital media stimuli are radically non-representative of all experiments.

(A related approach, perhaps somewhere in the middle, is the “purposive variation” formalized and advocated for by Egami and Hartman.)

My Radical Randomization approach will only work if we believe that subjective human brains can be put into the loop, of course. Even in the conjoint, researchers tend to use their noggins to rule out impossible combinations of characteristics like the lawyer with only a high school degree. Radical Randomization over nonsensical combinations should be ruled out — more generally, this Radical Randomization should not be true randomization but weighted randomization, where social scientists impose and update the weights. Social Science as a Multi-Armed Bandit, with humans in the loop. We need to believe that social scientists are capable of acquiring qualitative aka theoretical knowledge about what we’re studying.
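Here is roughly what weighted randomization with a human in the loop could look like. This is a minimal sketch under my own assumptions: the factors, the plausibility rule, and the reweighting step are hypothetical, and it is plain weighted random sampling with hand-updated weights, not a full bandit algorithm with a learned reward model.

```python
import itertools
import random

# Hypothetical conjoint-style factors; any real study would have many more.
FACTORS = {
    "occupation": ["lawyer", "farmer", "teacher"],
    "education": ["high school", "college", "law degree"],
}


def enumerate_designs(factors):
    """List every combination of factor levels as a dict."""
    names = list(factors)
    return [
        dict(zip(names, combo))
        for combo in itertools.product(*(factors[name] for name in names))
    ]


def is_plausible(design):
    """Researcher-supplied rule: no lawyers with only a high school degree."""
    return not (
        design["occupation"] == "lawyer" and design["education"] == "high school"
    )


def initial_weights(designs):
    """Uniform weight on plausible designs, zero on nonsensical ones."""
    return [1.0 if is_plausible(d) else 0.0 for d in designs]


def reweight(designs, weights, matches, multiplier):
    """Human-in-the-loop update: scale the weight of designs the researcher flags."""
    return [w * multiplier if matches(d) else w for d, w in zip(designs, weights)]


def draw(designs, weights, k, rng):
    """Sample k designs with probability proportional to their weights."""
    return rng.choices(designs, weights=weights, k=k)


rng = random.Random(0)
designs = enumerate_designs(FACTORS)
weights = initial_weights(designs)

# Suppose qualitative knowledge says teacher profiles deserve more attention.
weights = reweight(designs, weights, lambda d: d["occupation"] == "teacher", 2.0)

batch = draw(designs, weights, k=1000, rng=rng)
```

The weights are where theory enters. Zeroing out a design is a qualitative claim that it is not worth studying, and doubling one is a claim that it is.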

This is, as I said, my intuition; a weakly held belief. But how do we go about deciding which approach is best, the “replication” approach or the “radical randomization” approach?

The former approach is what we’re doing, and it could well be correct. I am open to being convinced. But I believe that “replication is the cornerstone of natural science, social science is like natural science, therefore social science needs replication” is not a good argument.

Instead, I believe that we need meta-scientific arguments. We should accept that social science faces resource constraints and that to argue about how to allocate those resources requires some goal, some way of learning whether our allocation is good or bad.

A final point. There has been (in my incredibly biased view) a renaissance of interest in philosophy of science, prompting people making meta-scientific arguments about the future of social science to invoke the authors they read in grad school: Popper, Kuhn, and Lakatos.

The problem is that these invocations by social scientists are begging the question of whether there is a distinction between natural and social science.

As Yarkoni points out in a withering response to Lakens' doctrinaire invocation of Popper and Lakatos, the latter *never even considered the possibility that his framework would apply to social science*. Indeed, Yarkoni cites a rare instance in which Lakatos considers the social sciences (italics from Lakatos):

This requirement of continuous growth … hits patched-up, unimaginative series of pedestrian “empirical” adjustments which are so frequent, for instance, in modern social psychology. Such adjustments may, with the help of so-called “statistical techniques” make some “novel” predictions and may even conjure up some irrelevant grains of truth in them. But this theorizing has no unifying idea, no heuristic power, no continuity. They do not add up to a genuine research programme and are, on the whole, worthless.[1]

If we follow that footnote 1 after “worthless,” we find this:

After reading Meehl (1967) and Lykken (1968) one wonders whether the function of statistical techniques in the social sciences is not primarily to provide a machinery for producing phoney corroborations and thereby a semblance of “scientific progress” where, in fact, there is nothing but an increase in pseudo-intellectual garbage. It seems to me that most theorizing condemned by Meehl and Lykken may be ad hoc. Thus the methodology of research programmes might help us in devising laws for stemming this intellectual pollution.

….Some of the cancerous growth in contemporary social ‘sciences’ consists of a cobweb of such [ad hoc] hypotheses.

Given that Lakens wrote in his review that he is “on the side of Popper and Lakatos” and “would also greatly welcome learning why Popper and Lakatos are wrong,” I imagine he greatly welcomed this passage. Or else, perhaps, he continues to think that Lakatos is right, and that quantitative social psychology is “worthless…intellectual pollution.”

My point is that the current meta-scientific moment offers social scientists an opportunity to radically reconceptualize what we’re doing. Scientism—the rote application of the methods of the natural sciences to inappropriate areas of inquiry—is in my opinion our original sin. With each new discovery of a way in which the study of human behavior does not live up to our idealized notion of Science, we despair and further contort ourselves into an unnatural posture.

Maybe it’s worth it. Maybe it’s not.

My question is: how can we know?